In the age of AI, it’s hard to predict the nuanced ways people will interact with technology, and even harder to control those interactions. The question is no longer just who is accessing information, but how that information may be used in ways that could expose your organization to unexpected risks. Securing AI therefore calls for a strategy that differs fundamentally from the security approaches you’re used to. It’s time to introduce content-based access control.

You may be familiar with role-based access control (RBAC), which assigns permissions and privileges to users based on their role in the organization. This model is popular among businesses because it is simple to implement. By adhering to the principle of least privilege, RBAC limits users to accessing only what they need, which helps maintain consistency and security.

While this method works well for controlling access to specific documents or information, it does not go far enough to address today’s AI risks.

Gen AI applications act on instructions expressed in natural language and content, which makes them hard to govern with RBAC alone. This lack of suitable controls is why many organizations find themselves in a “Block All” state as a means of managing these risks. Others have granted access to certain tools and tried to govern internal AI use through organization-wide AI safe-usage policies, which cannot actually be enforced.

However, blocking all access to Gen AI means missing out on its potential benefits to your organization, while AI safe-usage policies often leave gray areas or gaps in coverage. Some employees may engage in unsafe activity without realizing it violates the policy, or the policy may simply not cover it. Either way, the organization is put at risk.

Redefining Access for the AI Era

In addition to understanding who is making the request, a more thorough method of AI security involves controlling access based on the content of the requests and responses themselves. At Acuvity, we call this content-based access control (CBAC).

Like RBAC, CBAC is based on a predefined set of criteria established by the organization to safeguard information access. However, while RBAC may limit who in the organization has access to what data, documents, or intel in the first place, CBAC limits how those assets or information can be used. That includes tasks involving Gen AI. Acuvity combines elements of RBAC with thorough CBAC measures to ensure that AI security is fit for the way businesses and their people use Gen AI.

Because CBAC security controls are based on content and language, access to Gen AI tools doesn’t need to be all-or-nothing. You can still provide access to these tools for staff in all roles, but limit what they can do within those applications. For example, a software developer and an HR manager could both have access to ChatGPT. However, under the company’s CBAC policies, only the HR manager would be allowed to discuss compensation data with the tool, while the software developer would be prevented from using it this way. With this construct, you can implement more effective policies and protections for safe AI usage.
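To make the developer and HR manager example concrete, here is a minimal Python sketch of how a role check (RBAC) and a content check (CBAC) might be layered. It is an illustration under stated assumptions, not Acuvity’s implementation: the policy tables, the role names `hr_manager` and `software_developer`, and the keyword-based `detect_topics` helper are all hypothetical, and a production system would classify content with something far more capable than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical topic detector: naive keyword matching stands in for the
# content classification a real CBAC system would perform.
TOPIC_KEYWORDS = {
    "compensation": {"salary", "salaries", "compensation", "bonus", "pay band"},
}

# Hypothetical RBAC layer: which roles may use which tools at all.
TOOL_ACCESS = {
    "ChatGPT": {"hr_manager", "software_developer"},
}

# Hypothetical CBAC layer: which roles may raise which topic with which tool.
CBAC_POLICY = {
    ("ChatGPT", "compensation"): {"hr_manager"},
}


@dataclass
class Request:
    user_role: str
    tool: str
    prompt: str


def detect_topics(prompt: str) -> set[str]:
    """Return the topics whose keywords appear in the prompt (case-insensitive)."""
    text = prompt.lower()
    return {topic for topic, words in TOPIC_KEYWORDS.items()
            if any(word in text for word in words)}


def is_allowed(req: Request) -> bool:
    # RBAC: is this role allowed to use the tool at all?
    if req.user_role not in TOOL_ACCESS.get(req.tool, set()):
        return False
    # CBAC: for each sensitive topic detected, is this role allowed to raise it here?
    # A detected topic with no policy entry for this tool is denied by default.
    for topic in detect_topics(req.prompt):
        allowed_roles = CBAC_POLICY.get((req.tool, topic))
        if allowed_roles is None or req.user_role not in allowed_roles:
            return False
    return True


if __name__ == "__main__":
    prompt = "Summarize the salary ranges for the 2024 engineering pay bands"
    print(is_allowed(Request("hr_manager", "ChatGPT", prompt)))
    print(is_allowed(Request("software_developer", "ChatGPT", prompt)))
    print(is_allowed(Request("software_developer", "ChatGPT", "Explain Python decorators")))
```

Note that both roles hold the same tool-level grant; only the content rule differs. That separation is what allows access to stay broad while usage stays narrow.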

This model is also much easier to manage. Access to Gen AI tools doesn’t need to be granted case by case or updated frequently, and the tools don’t need to be banned outright. Instead of continuously managing access levels for a large population of individuals, business leaders can establish overarching AI governance for their content that automatically applies to all users, with no exceptions.

How Does This AI Security Strategy Work in the Wild?

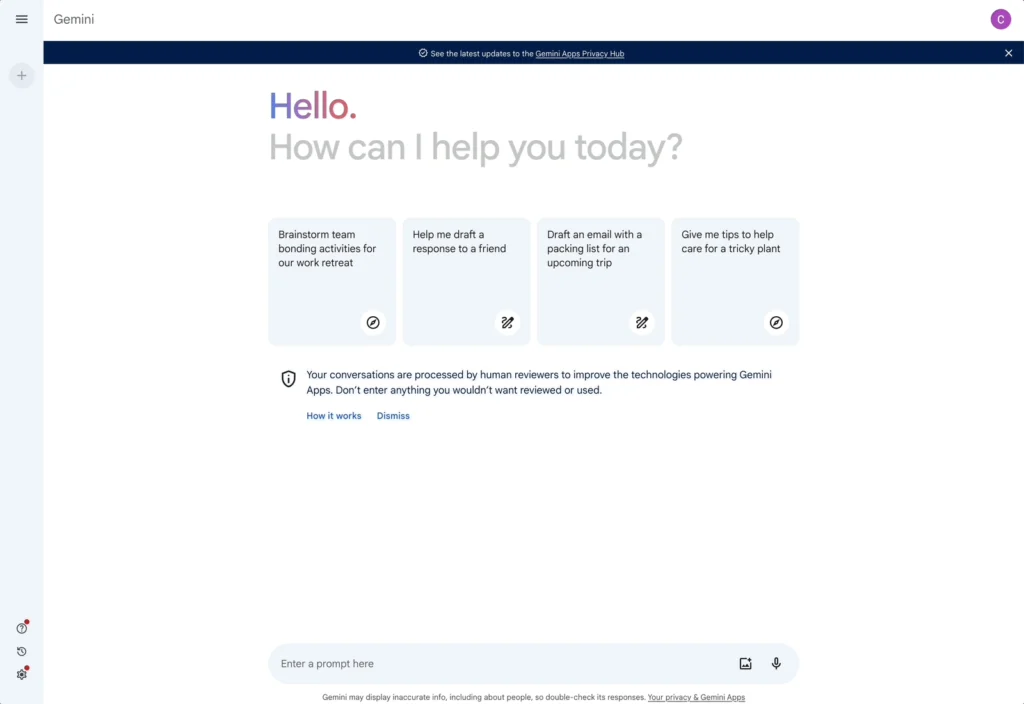

On its own, a Gen AI tool like ChatGPT knows only that you’ve entered information, not that the information is sensitive or valuable to your organization. Gen AI tools have their own built-in security measures, but they are not designed to block users from entering this kind of content, because they have no way of knowing that it matters to your business. So how do you provide Gen AI with enough context for CBAC to work?

We’re the first AI security provider to not only think about this problem but also solve it. Acuvity works as a buffer between Gen AI tools and your users, supplying that context while ensuring that no sensitive data slips through the cracks. Our platform makes it possible to specify and enforce which tools can be used and for which prompts. You decide what content can or cannot be entered, and our platform detects anything that violates those rules and instantly warns the user.
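The buffer idea can be sketched as a small gateway that checks every prompt against organization-defined rules before anything reaches the Gen AI tool. This is a hypothetical illustration, not Acuvity’s API: `PromptGateway`, the `no_codename` rule, the codename “Project Falcon”, and the `fake_tool` stand-in for a real Gen AI call are all invented for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# A rule inspects (tool, prompt) and returns a warning message, or None if the prompt is fine.
Rule = Callable[[str, str], Optional[str]]


@dataclass
class GatewayResult:
    forwarded: bool
    response: Optional[str] = None
    warnings: list[str] = field(default_factory=list)


class PromptGateway:
    """Hypothetical buffer sitting between users and Gen AI tools."""

    def __init__(self, rules: list[Rule], send_to_tool: Callable[[str, str], str]):
        self.rules = rules
        self.send_to_tool = send_to_tool  # stand-in for the real call to the Gen AI tool

    def submit(self, tool: str, prompt: str) -> GatewayResult:
        # Evaluate every rule so the user sees all violations at once.
        warnings = []
        for rule in self.rules:
            message = rule(tool, prompt)
            if message is not None:
                warnings.append(message)
        if warnings:
            # Block the request and warn the user instead of forwarding it.
            return GatewayResult(forwarded=False, warnings=warnings)
        return GatewayResult(forwarded=True, response=self.send_to_tool(tool, prompt))


# Hypothetical rule: flag a confidential project codename.
def no_codename(tool: str, prompt: str) -> Optional[str]:
    if "project falcon" in prompt.lower():
        return "Prompt mentions the confidential codename 'Project Falcon'."
    return None


# Hypothetical stand-in for a Gen AI tool's reply.
def fake_tool(tool: str, prompt: str) -> str:
    return f"[{tool}] reply to: {prompt}"


gateway = PromptGateway([no_codename], fake_tool)

if __name__ == "__main__":
    print(gateway.submit("ChatGPT", "Draft the Project Falcon launch memo").warnings)
    print(gateway.submit("ChatGPT", "Draft a generic launch memo").response)
```

Because each rule is a plain function that returns either a warning or `None`, new content policies can be added without changing the gateway itself.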

There are likely some kinds of content you’ll want to block from the start. For example, a tech company may choose to block any content that resembles code from being uploaded into ChatGPT but allow code to be sent to GitHub Copilot. Our content- and language-based controls also go beyond the prompts users type directly into the tool: protection extends to any assets they upload, such as PDFs, documents, and whiteboard photos, further reducing AI risk.
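The ChatGPT-versus-Copilot example comes down to two pieces: a detector that decides whether content resembles code, and a per-tool policy that decides whether code is allowed at that destination. The sketch below assumes a deliberately naive, hypothetical regex heuristic (`looks_like_code`) and a hypothetical `CODE_ALLOWED` table; it shows only the shape of the decision, not how any real product detects code.

```python
import re

# Naive, hypothetical heuristic: a prompt "resembles code" if enough lines
# match common source-code patterns. Real detection would be far more robust.
CODE_PATTERNS = [
    re.compile(r"^\s*(def|class|import|from|return|if|for|while)\b"),  # Python-like keywords
    re.compile(r"^\s*(public|private|function|const|let|var)\b"),       # Java/JavaScript-like keywords
    re.compile(r"[;{}]\s*$"),                                            # lines ending in ; { or }
    re.compile(r"^\s*#include\s*<"),                                     # C/C++ includes
]

# Hypothetical per-tool policy: code may go to GitHub Copilot but not to ChatGPT.
CODE_ALLOWED = {"GitHub Copilot": True, "ChatGPT": False}


def looks_like_code(text: str, min_lines: int = 2) -> bool:
    """Return True if at least `min_lines` lines match one of the code patterns."""
    hits = sum(1 for line in text.splitlines()
               if any(p.search(line) for p in CODE_PATTERNS))
    return hits >= min_lines


def check_code_policy(tool: str, prompt: str) -> bool:
    """Return True if the prompt may be sent to `tool` under the code policy."""
    if not looks_like_code(prompt):
        return True
    # Tools missing from the table default to blocking code.
    return CODE_ALLOWED.get(tool, False)


if __name__ == "__main__":
    snippet = "def add(a, b):\n    return a + b\n"
    print(check_code_policy("ChatGPT", snippet))
    print(check_code_policy("GitHub Copilot", snippet))
```

Defaulting unknown tools to “block” means that adding a new Gen AI tool does not silently open a path for source code to leave the organization.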

To help maintain regulatory compliance, Acuvity automatically protects users from inadvertently generating prompts that violate regulations and standards such as HIPAA, GDPR, and AICPA SOC, but it is ultimately up to you to decide what’s worth protecting for your business.

This is where visualization can be a major help. Once you understand how your people are using Gen AI tools and what they are entering, it is easier to determine what’s worth protecting. The best, most thorough AI governance policies are those that reflect real-world habits and use cases. A bird’s-eye view of company-wide AI use, individual employee behavior, and inappropriate Gen AI exchanges makes it possible to tailor your AI security strategy to the specific needs of your business.

You cannot be everywhere at once controlling what your people input into Gen AI tools, but Acuvity’s CBAC-based AI security solution can be. Get in touch with us to learn more.