We just released our inaugural State of AI Security report, based on research with 275 security and IT leaders across the United States.

The findings confirm what I’ve been observing in conversations with enterprise leaders: they’re struggling to secure and govern AI in ways they never did with previous technologies.

This isn’t a temporary gap that will close as the technology matures. The research reveals something more fundamental and urgent. AI has changed the nature of risk itself. Traditional security models assume you can define boundaries, monitor activity, and control access. AI operates outside these assumptions, and our current security tools weren’t built for technology that works this way.

What struck me most about the findings wasn’t any single statistic but what they collectively reveal about this moment. We’re watching an entire industry come to terms with a new reality. Security teams trained to defend boundaries are facing technology that recognizes no boundaries. Organizations built on clear ownership and accountability are deploying technology that belongs to everyone and no one. The careful balance between risk and control that enterprises spent decades perfecting has been completely disrupted.

In this post, I’ll share my perspective on what these findings reveal about the state of AI security and why this moment requires us to have difficult conversations and rethink our approach to enterprise risk.

The Reality of AI Governance Today

Seventy percent of organizations lack optimized AI governance. This finding sets the context for everything that follows. Organizations are deploying AI at scale while lacking the governance structures needed to manage it effectively.

To be clear, I’m not talking about policy governance: the documentation, compliance frameworks, and ethical guidelines that most organizations have already created. I’m talking about operational governance: the ability to enforce policies and monitor AI risk while AI systems are running. This means continuous risk assessment of AI models and agents, runtime enforcement of policies, and automated response to violations. It’s the difference between having policies that say what should happen and having the operational capability to make it happen.
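To make the difference between policy and operational governance concrete, here is a minimal Python sketch of runtime enforcement with an automated response. It is illustrative only and does not describe any particular product: it assumes a hypothetical `enforce` check sitting between users and an AI tool, and the regexes in the hypothetical `SENSITIVE_PATTERNS` table stand in for whatever data classification an organization actually uses. Prompts containing a blocked category are stopped; other matches are redacted, and every match is reported as a violation.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy table: illustrative patterns only. A real deployment would
# rely on a proper data classification or DLP engine, not two regexes.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class Decision:
    allowed: bool
    prompt: str  # the (possibly redacted) prompt that may be forwarded
    violations: list = field(default_factory=list)  # categories that matched


def enforce(prompt: str, block_on: frozenset = frozenset({"us_ssn"})) -> Decision:
    """Check a prompt at runtime, before it reaches an AI tool (hypothetical gateway)."""
    violations = [name for name, pat in SENSITIVE_PATTERNS.items() if pat.search(prompt)]

    # Automated response 1: block outright if any blocking category matched.
    if any(v in block_on for v in violations):
        return Decision(allowed=False, prompt="", violations=violations)

    # Automated response 2: redact non-blocking matches and let the prompt through.
    redacted = prompt
    for name in violations:
        redacted = SENSITIVE_PATTERNS[name].sub(f"[REDACTED:{name}]", redacted)
    return Decision(allowed=True, prompt=redacted, violations=violations)
```

For example, `enforce("Summarize the thread from alice@example.com")` returns an allowed decision whose prompt reads `Summarize the thread from [REDACTED:email]`, while a prompt containing an SSN-formatted number is blocked outright. Pattern matching like this is easy to evade; the point is where enforcement happens (during operation), not how thorough it should be.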

Organizations have governance frameworks that work for traditional IT, but these same structures fail when applied to AI. The technology operates across every system simultaneously, through channels that existing governance wasn’t designed to oversee. Nearly 40% of organizations operate with ad hoc practices or no AI-specific governance at all.

The AI governance crisis helps explain the other patterns we found. Organizations expect incidents they can’t prevent. Ownership is fragmented across multiple teams. Runtime remains the most vulnerable yet least defended phase. Without operational governance and runtime enforcement, without the ability to see and control AI usage as it happens, these problems compound.

The Incidents Organizations See Coming

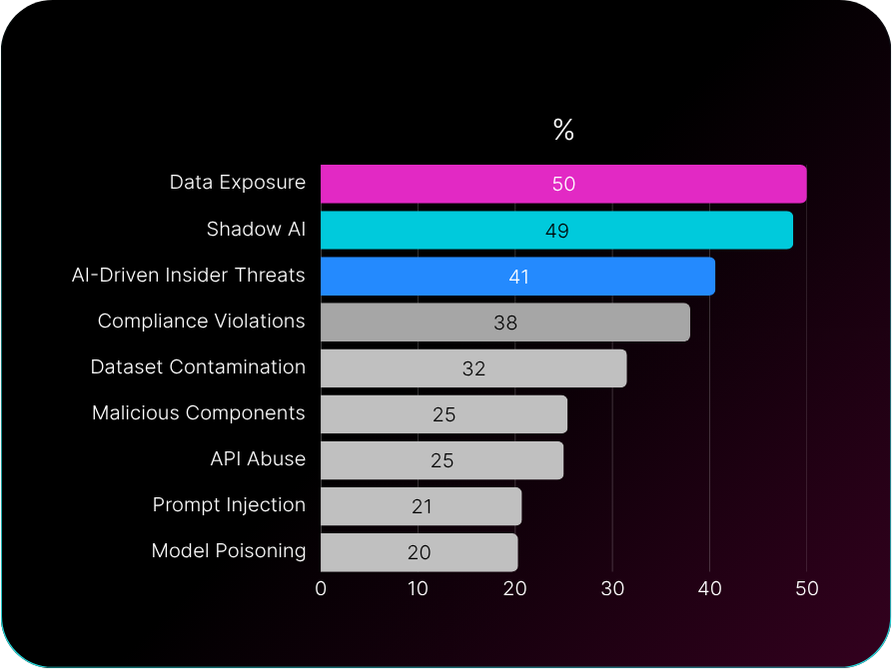

Fifty percent of organizations expect data leakage through AI tools within the next twelve months. Forty-nine percent anticipate Shadow AI incidents in the same timeframe. These organizations are predicting specific incidents they believe will occur.

Organizations see exactly what’s coming. They understand that employees will share sensitive data with AI tools, that Shadow AI will proliferate beyond their control, and that these activities will lead to incidents. They have visibility into the risk. What they lack are the tools and frameworks to prevent what they can clearly see approaching.

The gap between understanding AI’s risks and being able to address them has grown wider than anything we’ve experienced in enterprise security. Organizations can identify the threats AI creates. They can predict what types of incidents will occur and even estimate when. What they cannot do with current security tools is stop them.

Think about what this means for the security profession. We built our entire discipline on the premise that with the right tools, processes, and vigilance, we can prevent most bad outcomes. Now security teams are being asked to accept that certain incidents are inevitable and to focus on minimizing damage.

This shift from prevention to resilience represents a new chapter in how we think about enterprise risk.

The End of Traditional Ownership

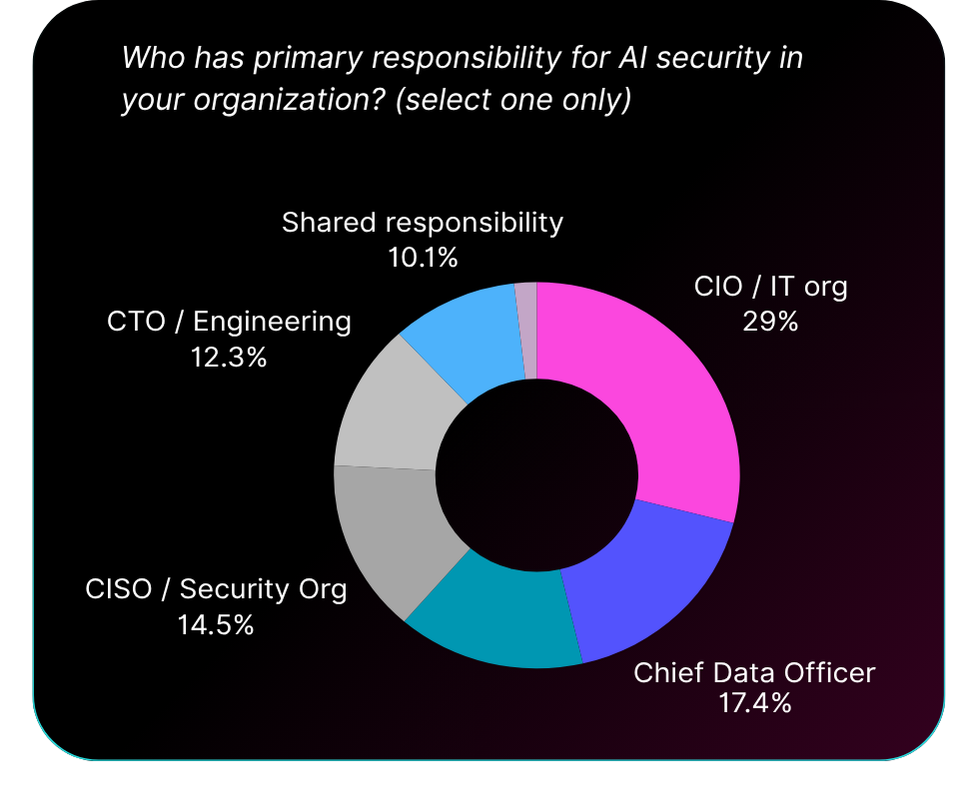

When we asked who owns AI security, CIOs came first at 29%. CISOs, who own every other security domain in the enterprise, ranked fourth at just 14.5%, behind Chief Data Officers (17%) and infrastructure teams (15%).

This reversal of traditional ownership tells us something important about AI. It doesn’t fit into existing security models where CISOs have clear authority and accountability. Instead, AI security has scattered across the organization. CIOs own it because AI lives in their applications. CDOs claim it because AI consumes their data. Infrastructure teams manage it because AI runs on their systems.

The fragmentation means no one has complete visibility or control. Each team sees their piece of AI operations but misses what’s happening in other domains. When incidents occur, accountability becomes unclear. Is the CIO responsible because they deployed the AI application? The CDO because they manage the data? The infrastructure team because they run the platform? Or the CISO, who’s supposed to protect the enterprise but ranks fourth in ownership?

This ownership confusion connects directly to the governance crisis. Seventy percent of organizations lack optimized AI governance, and the fragmented ownership helps explain why. Without clear authority, you can’t enforce consistent policies. Without single accountability, you can’t drive governance improvements. Without unified ownership, you get exactly what the data shows: scattered responsibility, gaps in coverage, and governance that doesn’t work.

The question isn’t simply who should own AI security. It’s whether single ownership is even possible for technology that operates everywhere at once.

What Runtime Risk Reveals About AI Security

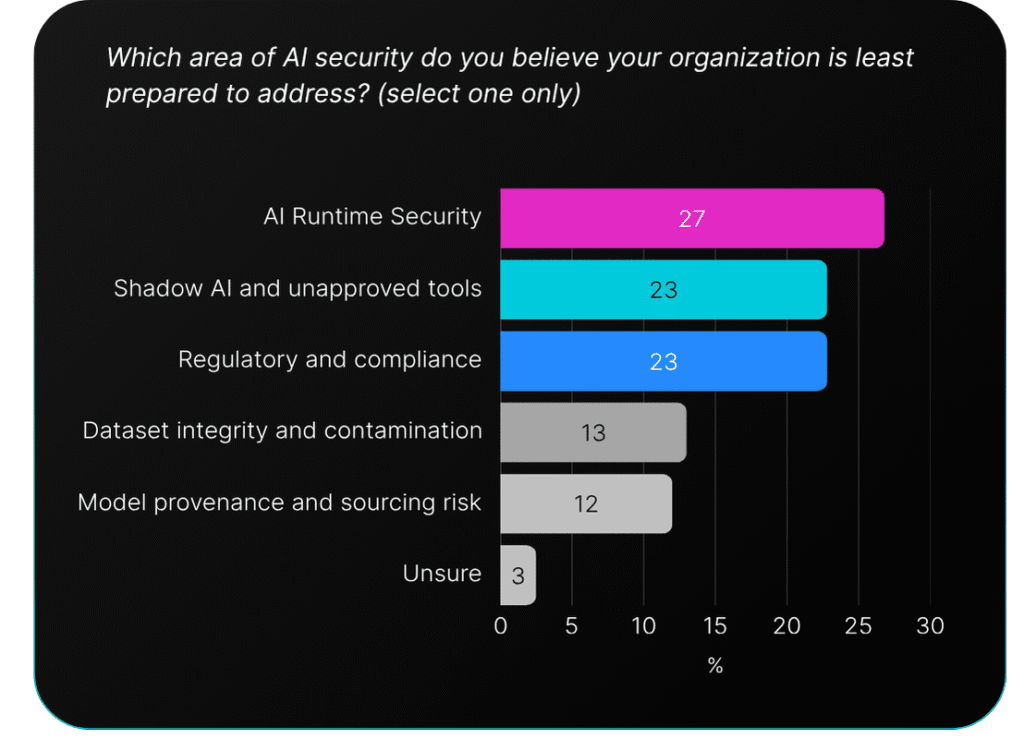

Runtime is both the most vulnerable phase and the area where organizations are least prepared. Thirty-eight percent identify it as their biggest risk area, while 27% admit it’s where they lack the most capability.

The contrast with pre-deployment concerns tells the story. Only 13% worry most about dataset integrity. Just 12% cite model provenance as their top concern. Organizations are far more concerned about what happens when AI is in use than about securing components before deployment.

This represents a break from traditional security thinking. The shift-left approach that has dominated security for years focuses on catching problems early, during development and build. With AI, organizations are telling us the real risks emerge later, during operations. The controls and processes we’ve refined for pre-deployment security don’t address what keeps security leaders awake: what happens when AI is actually running in production.

The 27% who say they’re least prepared for runtime security are being honest about a gap most organizations face. They lack visibility into AI behavior in production. They can’t detect when models drift or when outputs become unreliable. They struggle to identify prompt manipulation or adversarial inputs in real time.

This runtime gap explains why so many expect incidents. When your biggest risk area is also where you’re least prepared, incidents become inevitable.
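Drift, at least, is a runtime gap that can be measured. As one illustrative approach (not drawn from the survey), the Population Stability Index (PSI) compares how a model's outputs or scores are distributed across bins in production against a baseline. The sketch below assumes both distributions have already been binned into proportions. The 0.2 alert threshold is a commonly cited rule of thumb rather than a standard, and this check does nothing to detect prompt manipulation or adversarial inputs.

```python
import math
from typing import Sequence


def psi(baseline: Sequence[float], current: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are per-bin proportions over the same bins (each summing to ~1).
    Proportions are floored at eps so empty bins don't cause log(0) or division by zero.
    """
    if len(baseline) != len(current):
        raise ValueError("distributions must use the same bins")
    total = 0.0
    for b, c in zip(baseline, current):
        b, c = max(b, eps), max(c, eps)
        total += (c - b) * math.log(c / b)
    return total


def drifted(baseline: Sequence[float], current: Sequence[float], threshold: float = 0.2) -> bool:
    # Assumed threshold: cutoffs between roughly 0.1 and 0.25 are used in practice.
    return psi(baseline, current) > threshold
```

For instance, a model whose outputs split 50/50 between two categories at baseline but 90/10 in production scores a PSI of roughly 0.88, well above the threshold, while a shift to 52/48 scores well under 0.01.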

The AI Supply Chain Budget Priority

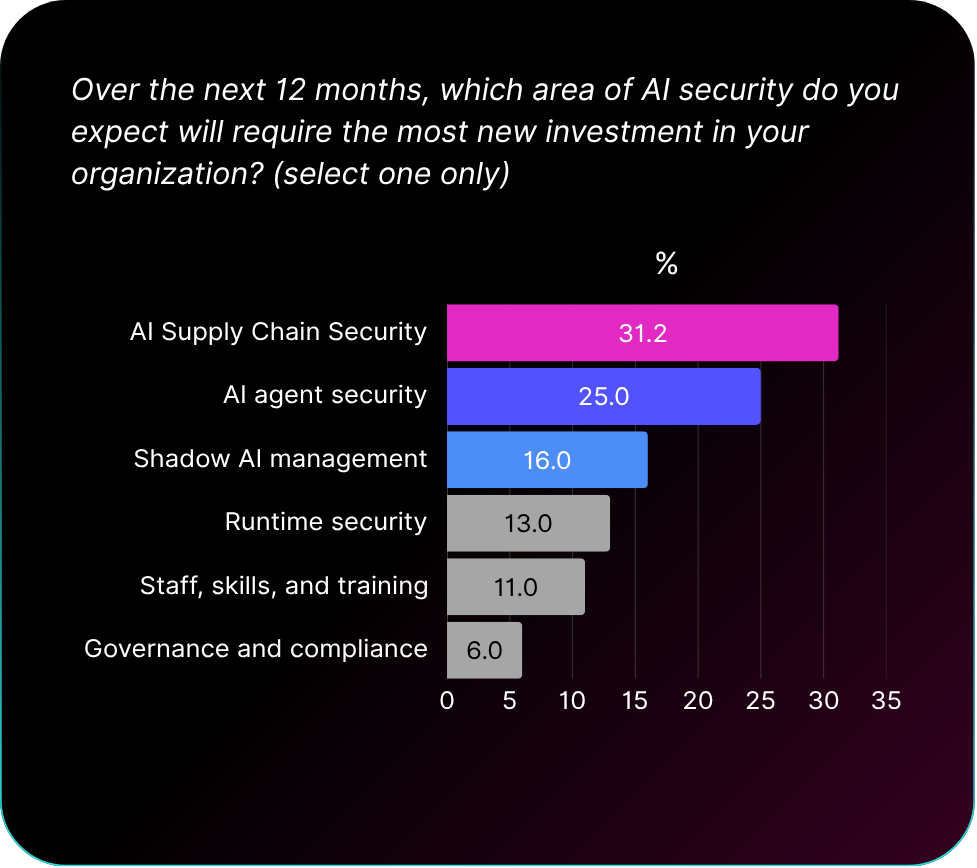

Thirty-one percent of organizations are making AI supply chain security their top investment priority over the next twelve months. This represents one of the biggest shifts in enterprise security spending in decades, signaling a move beyond isolated tool management toward comprehensive supply chain oversight that encompasses models, datasets, agents, plugins, APIs, and SaaS AI features.

The concept of AI supply chain security remains poorly defined across the industry. Most current approaches reference traditional software supply chain models, focusing on bills of materials and static inventories, or narrowly scope security to known vulnerabilities. Our survey data suggests organizations understand AI supply chains as something entirely different.

Organizations identify data sources and embeddings as their greatest AI supply chain risk (31%), followed closely by external APIs and SaaS-embedded AI features (29%). Model sourcing and provenance, which receive significant attention in traditional supply chain discussions, rank as concerns for only 13% of organizations. This disconnect between what security vendors emphasize and what organizations actually fear reveals the gap between current solutions and real needs.

AI supply chain security differs from software supply chain security because it must address components that behave dynamically during runtime. AI components create risks through their live interactions with data and users that cannot be fully assessed during pre-deployment phases. Organizations recognize this runtime dimension: while 38% identify runtime as their most vulnerable phase, an additional 27% view risks as spanning the entire AI supply chain from sourcing through runtime deployment.

The AI supply chain includes components that traditional approaches never had to address: autonomous agents that can access multiple systems, embeddings that process and potentially retain sensitive data, and APIs that enable real-time AI capabilities across enterprise applications. These components create security implications that only become fully visible when they operate in production environments with real data and user interactions.

This investment shift shows organizations grasping the true nature of AI risk and allocating resources accordingly.

The Conversation Ahead

This research provides evidence for conversations that need to happen in every organization deploying AI. It offers meaningful benchmarks for understanding where your organization stands. Are you part of the 70% struggling with governance? The 49% expecting Shadow AI incidents? The 27% least prepared for runtime security?

Knowing you’re not alone in these challenges can be the starting point for honest internal discussions about what’s actually possible versus what we wish were possible.

What this moment requires isn’t more tools or bigger budgets. Organizations making progress have accepted that AI has completely changed the nature of enterprise risk. They’re building new approaches rather than trying to retrofit existing (and often ineffective) ones. They’re creating governance that assumes constant change, developing visibility for technology that exists everywhere at once, and preparing response capabilities for incidents that didn’t exist just three years ago.

The conversations need to include some difficult questions. Can traditional ownership models work for technology that spans every domain? How do we balance AI’s business value with risks we can’t fully control? What does security success look like when prevention is no longer guaranteed? Can legacy security vendors evolve fast enough to address AI risk, or has that ship sailed?

These findings show that the challenges you’re experiencing aren’t unique to your organization but reflect the broader reality of securing AI. The governance struggles, the ownership challenges, the runtime gaps, and the incident expectations are industry-wide patterns that require industry-wide rethinking.

My hope is that these findings spark the difficult but necessary conversations in your organization. Between security teams and business leaders about realistic risk acceptance. Between CIOs and CISOs about ownership and collaboration. Between those deploying AI and those trying to secure it.