The Third Wave of Security: Why Gen AI Demands a Ground-Up Rethink

Over the past two decades, enterprise technology has evolved in waves—each one pushing boundaries, transforming operations, and inevitably reshaping the security landscape. Security has successfully responded to the cloud revolution and navigated the complexities of SaaS adoption. Now, we stand at the precipice of a new era: Generative AI (Gen AI).

Unlike previous waves, Gen AI isn’t just about where data is stored or who hosts the software; it’s about how machines reason, create, and interact with sensitive information. For this, legacy security models don’t cut it. We need a rapid response built on ground-up thinking.

A Look Back: The First Two Waves

Wave 1: SaaS Adoption

When SaaS first emerged, enterprises hesitated: “How can we secure apps we don’t even own?” Eventually, the ecosystem matured with tools like CASBs, DLP, SSO, MFA, and posture management platforms. The perimeter disappeared, but security evolved to follow users, data, and applications.

Wave 2: Cloud Explosion

Next came cloud computing and once again, hesitation was common. Trusting critical workloads to public infrastructure felt risky. But over time, the cloud proved its value, and security adapted with shared responsibility models, identity-driven access, encryption, zero trust, CSPM, CWPP and CNAPP.

Wave 3: Gen AI Has Arrived—and It’s Different

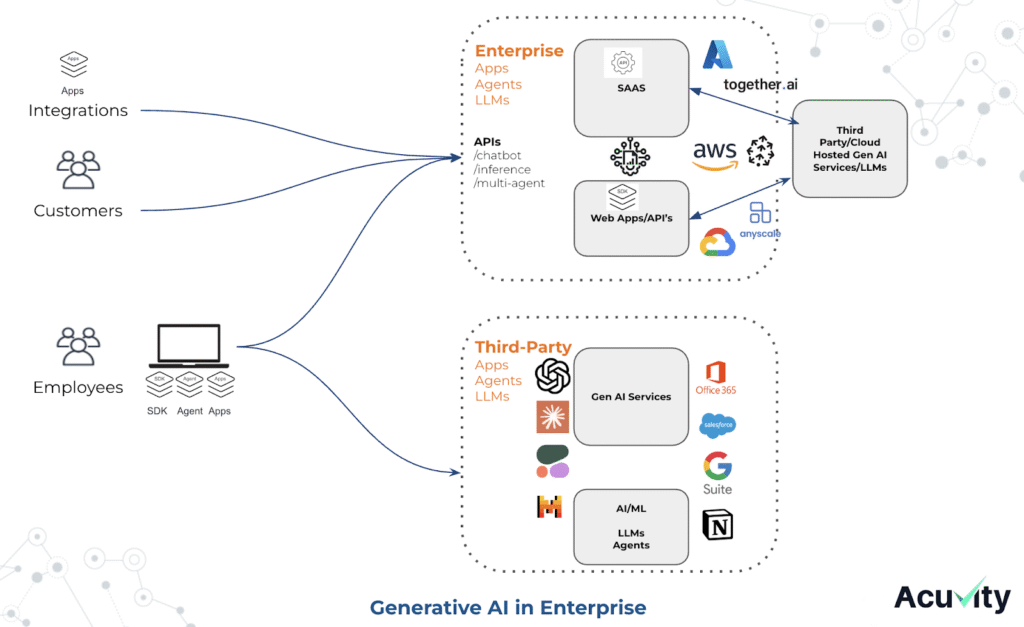

Gen AI has one of the fastest adoption cycles of any technology. While some organizations remain wary because of the risks, leaders at others are pushing for Gen AI adoption across the enterprise.

Generative AI is not just another tool or just another SaaS offering; it’s a thinking assistant, a code-writer, a summarizer, a planner, a creative partner. Employees are using Gen AI to draft documents, automate workflows, generate insights, and even make decisions. It runs as SaaS, on your computers, and even in your cloud. It’s pervasive.

Gen AI operates through workflows, whether fixed or dynamic, and securing them requires understanding their context and semantics.

In the meantime, employees are signing up for freemium AI services, sometimes paying for them individually, and some are even downloading risky applications in the name of “productivity boosts from Gen AI.”

They are running models on their laptops and using them on various GPU clouds.

But here are some hard truths:

- Most organizations do not understand the AI sprawl within their own walls. Acuvity has observed more than ten thousand Gen AI services with varied risk profiles, and the number is growing by 20-50 services per week.

- Enterprises understand there are risks, which is why they either “block all” or allow usage while handing employees a Gen AI safe-usage policy and making it the employees’ problem.

- More kinds of data, such as pictures of whiteboards, revenue spreadsheets, and screenshots of intellectual property, have started to leave your organization and are being uploaded to Gen AI services and agents. The systems you used to protect that data aren’t equipped for this world.

- Gen AI content proliferation, or data infiltration, is a concern as enterprises bring more generated code and other content into the organization. We have seen even the likes of GitHub Copilot and Cursor shrug off problems as “not our vulnerability.”

- Last but not least, the landscape is ever changing, and so is the attack surface; we hear of something new every day. Agents were recently just a topic of discussion, and now they are on your desktops, in applications that connect to various data sources.

The Security Gaps in a Gen AI World

1. DLP Can’t Understand the Prompts

Data Loss Prevention (DLP) was designed for files, emails, and structured flows, not real-time, unstructured prompts and generated outputs. It doesn’t understand the meaning behind “write a summary of our Q2 financial report for a public press release,” it can’t tell when an image of a whiteboard with sensitive content is being uploaded, and it certainly does not understand the context in which data was received through connectors.

Anecdote: Imagine the consequences of such a leak for a publicly traded company that feeds in its previous quarter’s earnings while preparing for the upcoming earnings call.

2. Firewalls Are Blind to Context

Next-gen firewalls, those that go beyond IPs and ports to inspect applications, can’t analyze the semantic context of Gen AI usage. They cannot understand the prompts and content flowing through Gen AI interactions.

Anecdote: Imagine an agent going rogue: an email assistant that, instead of summarizing an email, starts forwarding your mail because of malicious content in the email it just read. The worst part is that neither the user nor the organization even knows the email was forwarded.

3. Intent and Malicious Content Are the New Attack Vectors

Gen AI introduces a new form of risk: not just where the data goes, but how it is interpreted, transformed, and reused. As the models interpret prompts and content and are susceptible to attacks, context awareness is key to understanding and securing workflows.

Anecdote: Imagine Cursor, Claude Desktop, or browser-control tools acting on your behalf, interacting with various data sources and sending their contents to LLMs. Did the user do it? Did the agent do it? Was the user aware of what was happening?

4. Model Training – a new paradigm for Data Leaks

Many Gen AI services continually train their models to deliver improvements. Enterprise data can be used for training even by the most reputable services when they are not consumed under appropriate contracts and agreements.

Models are “mimicking parrots”: when trained on specific data, they can even reproduce the content verbatim.

Anecdote: Uploading intellectual property documents through the freemium tier of ChatGPT is not safe. If models are trained on these documents and someone asks about semantically similar content, the answers can include content from your documents.

Enter ContextIQ-based Gen AI Security

Gen AI operates on multi-modal content such as text, images, and documents, and transforms it based on a natural language query. RBAC alone is not enough.

Security needs a deeper understanding of whether the user is accessing Google Drive directly or an agent is doing so on the user’s behalf. Did the agent that was reading an email to summarize it have its goal hijacked? Context is important.

To secure Gen AI, we need Context Based Security: a new paradigm that protects meaning, intent, and context, not just files and APIs. Here are the pillars of Context Security:

- DLP++: Regex and OCR alone are a thing of the past; understanding multi-modal content is required. Gen AI understands your IP, sensitive content, earnings, whiteboards, code, and more, so security now needs to understand the context of content along with the interactions.

- Prompt Detections and Output Controls: Detectors capable of recognizing prompt injections, jailbreaks, intent hijacking, malicious prompts, harmful content, toxicity, bias, and misinformation, among others.

- Behavior Detections for users and agents: Detect behavioral anomalies and privilege escalations, among others.

- Risk Awareness: Awareness of risk across providers (services, agents, LLMs), users (locations, teams), and data.

- Context Based Access Control: The context for a Gen AI interaction combines multiple aspects, such as the user, the service/agent/application in use, the data, the risks of each, and the workflow, whether human or agentic.
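To make the idea of combined context more concrete, here is a minimal Python sketch of how a single interaction might be represented and evaluated. It is an illustration under stated assumptions, not a description of any product: the `InteractionContext` fields, the three-level `Risk` scale, and the `allow`/`review`/`block` outcomes are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Risk(IntEnum):
    # Hypothetical three-level scale; real systems may score risk differently.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class InteractionContext:
    """One Gen AI interaction, described by the aspects listed above."""
    user: str
    team: str
    service: str         # the service, agent, or application in use
    service_risk: Risk   # risk attributed to that provider
    data_risk: Risk      # sensitivity of the data in the prompt or upload
    agentic: bool        # True when an agent acts on the user's behalf


def decide(ctx: InteractionContext) -> str:
    """Return "allow", "review", or "block" (hypothetical outcomes)."""
    # Highly sensitive data never goes to a high-risk service.
    if ctx.data_risk == Risk.HIGH and ctx.service_risk == Risk.HIGH:
        return "block"
    # Agentic workflows are held to a stricter threshold than a human user.
    threshold = Risk.MEDIUM if ctx.agentic else Risk.HIGH
    if max(ctx.service_risk, ctx.data_risk) >= threshold:
        return "review"
    return "allow"
```

The point of the sketch is that no single attribute decides the outcome: the same user and service can be allowed for low-risk data in a human workflow and sent for review when an agent is acting on the user's behalf.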

Context Security Examples:

- Provide auditability with context-aware records of interactions between users, agents, models, and data sources, and attribute each action to its actor.

- Use DLP++ to disallow sensitive uploads to risky services.

- Provide insights into risky user and agent behavior, along with the root cause.

- Block risky features, tools, and plugin usage, such as browser and computer control.

- Detect and block malicious intent, jailbreaks, and injections, which are generally carried out over multiple steps.

- Block agents from escalating privileges and from leveraging other threats and vulnerabilities.

And do this while allowing all employees, or groups of employees, to use low-risk services. For example:

- Sales teams can use sales and productivity agents

- Marketing teams can use Typeface

- Product Managers can use beautiful.ai

And provide adherence to frameworks such as ISO 42001, NIST, MITRE, and OWASP for LLMs and agents.

Managing Risks and Securely Adopting Gen AI

We at Acuvity believe the best security is transparent, yet has depth of coverage and clear reasoning behind its security practices.

Here are our recommendations for enterprises:

Understand Gen AI usage with Visibility

- To start with, enterprises must have Shadow AI discovery covering usage across the organization. Shadow AI is not just about the unsanctioned services in use but also about applications, agents, and models.

This is achieved by having a complete inventory of the following:

- Services (Internal and External)

- Agents

- Desktop applications

- LLMs

In addition to services, we also need an understanding of plugins, tools, and tiers of services.

- Enterprises must understand what kind of data is being exchanged with Gen AI services, and by which teams, users, and agents.

- Enterprises should be able to understand the risks that a particular usage brings and the mitigation strategies.

- Understand what users are doing and what agents are doing on behalf of the users and customers.

- A similar level of understanding is needed for applications and agents that have either been built or deployed in house.
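As a rough illustration of the inventory described above, the following Python sketch groups observed Gen AI assets that are not on a sanctioned list by kind. The `Asset` record, the `kind` values, and all asset names are hypothetical; how usage is actually observed (network logs, endpoint agents, and so on) is outside the scope of this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    name: str
    kind: str       # "service", "agent", "desktop_app", or "llm"
    internal: bool  # True if built or deployed in house


def find_shadow_ai(observed: list[Asset], sanctioned: set[str]) -> dict[str, list[str]]:
    """Group observed assets that are not on the sanctioned list by kind."""
    shadow: dict[str, list[str]] = defaultdict(list)
    for asset in observed:
        if asset.name not in sanctioned:
            shadow[asset.kind].append(asset.name)
    return {kind: sorted(names) for kind, names in shadow.items()}


# Hypothetical usage data, for illustration only.
observed = [
    Asset("chat-service-a", "service", internal=False),
    Asset("email-summarizer", "agent", internal=True),
    Asset("local-model-x", "llm", internal=False),
    Asset("code-helper", "desktop_app", internal=False),
]
sanctioned = {"chat-service-a", "email-summarizer"}

print(find_shadow_ai(observed, sanctioned))
# {'llm': ['local-model-x'], 'desktop_app': ['code-helper']}
```

A fuller inventory would also record plugins, tools, and service tiers per asset, since the same service can carry different risk depending on how it is consumed.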

Governance and Control

- Enterprises need enough information to decide whether they should “sanction” an application, agent, or LLM.

- Once visibility is in place, policies that conform to the relevant frameworks should be implemented.

- Maintain logs, traceability, and complete auditability of users, agents, and workflows.

- Use Context Based Access Control (CBAC) to apply:

- Service risk-based controls.

- Tier-based controls.

Example: Freemium tiers carry more risk.

- Plugin- and tool-based controls.

Example: Claude is great, but do you want it to control your computer?

- Output-based controls to catch risky generated content.

Example: Catch vulnerabilities in generated code.

- Controls on sensitive and confidential uploads and data.

Examples: Whether your developers use Cursor, GitHub Copilot, Amazon CodeWhisperer, or any other assistant, don’t upload secrets and passwords.

Do not upload whiteboards with sensitive content, last quarter’s revenue before earnings, healthcare data, mortgage documents, chip designs, or other sensitive material.
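The control types listed above could be expressed declaratively. The Python sketch below is one hypothetical way to do so: it checks a request against tier, tool, and upload rules and reports every control that is tripped. The `Request` fields, the rule sets, and the label names are assumptions made for illustration, and the upload labels are assumed to come from some upstream content classifier that is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    service: str
    tier: str                                              # e.g. "free" or "enterprise"
    tools: set[str] = field(default_factory=set)           # plugins/tools enabled
    upload_labels: set[str] = field(default_factory=set)   # labels from a classifier (assumed)


# Hypothetical policy values; a real deployment would tune these.
BLOCKED_UPLOAD_TIERS = {"free"}
BLOCKED_TOOLS = {"computer_control", "browser_control"}
BLOCKED_LABELS = {"secret", "earnings", "whiteboard", "healthcare"}


def violations(req: Request) -> list[str]:
    """List every control the request trips; an empty list means allowed."""
    found: list[str] = []
    # Tier-based control: no uploads of any kind on freemium tiers.
    if req.tier in BLOCKED_UPLOAD_TIERS and req.upload_labels:
        found.append(f"tier:{req.tier}")
    # Plugin/tool-based control: risky capabilities are blocked outright.
    found += [f"tool:{tool}" for tool in sorted(req.tools & BLOCKED_TOOLS)]
    # Upload control: sensitive content categories are blocked everywhere.
    found += [f"upload:{label}" for label in sorted(req.upload_labels & BLOCKED_LABELS)]
    return found
```

Returning the full list of tripped controls, rather than a single yes or no, keeps the decision auditable: the log can show which rule blocked a request and why.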

ROI Analysis

Generative AI is and will remain pervasive. One approach is to apply band-aids to incumbent solutions and then stitch all the information together to understand the risks. Enterprises will benefit more from a single pane of glass that provides a holistic view of everything Gen AI in the enterprise.

Summary

As a security practitioner, you need to get in front of this with a sense of urgency, protecting both users and applications/agents. More than 10,000 Gen AI apps have already been released, and another 100 are being created each week. The genie is already out of the bottle.

We’re building Gen AI security that lets organizations innovate responsibly with Gen AI. Done right, security can be the enabler of AI adoption.

The third wave of security, for Gen AI, will be context based: it allows your organization to understand and audit Gen AI usage and apply context-aware policies.