Shadow AI Management

Discover, control, and secure unsanctioned AI usage across your enterprise.

Shadow AI refers to the use of artificial intelligence tools by employees or business units without the awareness, approval, or oversight of central IT, security, or compliance teams. These unsanctioned tools, including generative AI, external APIs, plugins, and freemium services, can introduce serious risk: sensitive data may be exposed, regulatory requirements may be violated, decisions may hinge on unreliable outputs, and unknown vendors or infrastructure may become part of your attack surface.

With Acuvity, enterprises get continuous visibility into where and how AI tools are being used, risk assessments of those tools, and policy enforcement that reduces exposure and keeps usage aligned with security and compliance requirements.

Challenges & Risks

Shadow AI introduces unseen vulnerabilities across security, compliance, and governance. Without controls, organizations face multiple serious risks:

- Sensitive or regulated data exposure via external AI tools that lack enterprise-grade protections.

- Regulatory non-compliance when AI services are used in ways that violate data protection laws (e.g., GDPR, HIPAA) or internal policy requirements.

- Vendor risk from external service providers with opaque retention policies, weak security, or problematic jurisdictional practices.

- Unreliable or biased outputs from unsanctioned models used without validation, leading to poor or misleading decisions.

- Lack of visibility, oversight, or audit trails over AI tool use and data flows, making detection of misuse difficult.

- Intellectual property or trade secrets risk if proprietary content is submitted or exposed to outside models.

- Expanded attack surface via browser extensions, APIs, embedded AI features, or freemium tools that bypass standard security controls.

Discovery

Identifies every unsanctioned AI tool in use across teams, accounts, and data flows, giving security and compliance leaders a complete inventory.

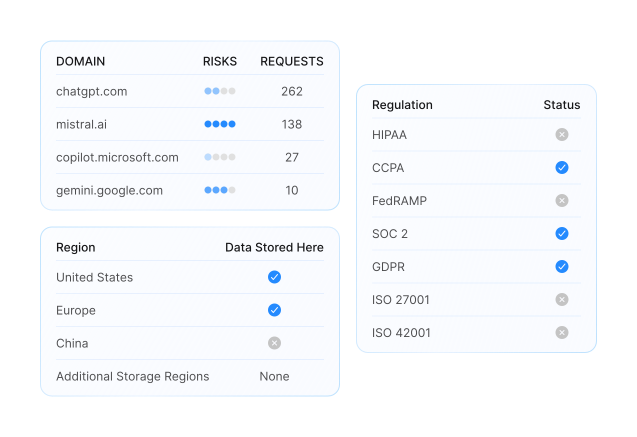

Risk Evaluation

Analyzes each tool against security, compliance, and vendor factors to surface which pose the greatest exposure.

Policy Enforcement

Applies precise controls to block unsafe tools, govern sensitive data use, and align activity with enterprise standards.

Shadow AI Management FAQs

What exactly is “Shadow AI”?

Shadow AI covers any use of artificial intelligence that takes place outside of sanctioned channels. That might be a marketing team using a freemium text generator with a personal login, a developer installing an IDE plugin that calls an LLM, or an employee uploading documents into a consumer chatbot. Because these uses bypass IT and security, they are invisible to governance frameworks. Acuvity addresses this by maintaining an extensive and continually updated catalog of AI tools and services. The platform correlates network traffic, user activity, and integration patterns against this catalog to identify where AI is in use, who is using it, and what data is involved. The result is not just a list of tools, but a contextual map of shadow AI activity across the enterprise.
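As a rough illustration of the catalog correlation described above, the sketch below matches observed network events against a small catalog of AI tool domains and groups the users seen per tool. Everything in it is an assumption made for illustration: the `CATALOG` entries, the event fields (`host`, `user`), and the function names are hypothetical and do not describe Acuvity's actual data model or API.

```python
from collections import defaultdict

# Hypothetical catalog mapping a service domain to a tool name.
# A real catalog would be far larger and continually updated.
CATALOG = {
    "chatbot.example": "Example Chatbot",
    "summarizer.example": "Example Summarizer",
}


def match_tool(host: str):
    """Return the catalog tool for a hostname, or None if it is not an AI tool."""
    host = host.lower().rstrip(".")
    for domain, tool in CATALOG.items():
        # Match the domain itself or any of its subdomains.
        if host == domain or host.endswith("." + domain):
            return tool
    return None


def build_inventory(events):
    """Group users by the AI tool their traffic was matched to."""
    inventory = defaultdict(set)
    for event in events:
        tool = match_tool(event["host"])
        if tool is not None:
            inventory[tool].add(event["user"])
    return dict(inventory)


# Hypothetical traffic events; field names are assumptions.
events = [
    {"host": "api.chatbot.example", "user": "alice"},
    {"host": "chatbot.example", "user": "bob"},
    {"host": "intranet.corp.example", "user": "alice"},
    {"host": "summarizer.example", "user": "carol"},
]
inventory = build_inventory(events)
```

Here `inventory` records who used which cataloged tool, while traffic to hosts outside the catalog is ignored. A contextual map as described above would also need the data involved in each interaction, which this sketch leaves out.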

How can I tell if these unsanctioned tools present real risk?

Risk is not uniform. A design team using a low-risk summarization plugin is different from a finance team uploading customer records to a generative chatbot. Acuvity’s Adaptive Risk Engine evaluates each discovered tool on multiple dimensions:

- Data sensitivity: what types of information are being handled, such as regulated personal data or proprietary intellectual property.

- Vendor posture: whether the provider has strong security controls and certifications, and whether it is known to retain and reuse submitted data.

- Integration method: whether the AI is accessed through APIs, browser extensions, embedded models, or desktop agents, since each path introduces different attack surfaces.

- Usage context: who inside the company is using the tool, how often, and whether that aligns with the organization’s risk tolerance.

These factors combine into a weighted risk score. That score changes as conditions change — for example, if usage expands into a sensitive business unit, the risk rating increases automatically.
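One way to picture a weighted risk score is a weighted average of per-factor ratings. The sketch below is only that: the weights, the 0 to 1 rating scale, the 0 to 100 output range, and the name `risk_score` are all assumptions chosen for illustration, not the Adaptive Risk Engine's actual formula.

```python
# Hypothetical weights for the four dimensions; they sum to 1.0.
# These values are illustrative only, not Acuvity's real weighting.
WEIGHTS = {
    "data_sensitivity": 0.40,
    "vendor_posture": 0.25,
    "integration_method": 0.15,
    "usage_context": 0.20,
}


def risk_score(factors: dict) -> float:
    """Combine factor ratings (each in [0, 1]) into a 0-100 weighted score."""
    for name in WEIGHTS:
        value = factors[name]
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1, got {value}")
    total = sum(WEIGHTS[name] * factors[name] for name in WEIGHTS)
    return round(100 * total, 1)


# Same tool, two usage patterns (ratings are made up for the example).
design_team = {
    "data_sensitivity": 0.2,
    "vendor_posture": 0.3,
    "integration_method": 0.4,
    "usage_context": 0.2,
}
# Usage expands into finance: more sensitive data, riskier context.
finance_team = dict(design_team, data_sensitivity=0.9, usage_context=0.8)

design_score = risk_score(design_team)
finance_score = risk_score(finance_team)
```

With these made-up ratings, the same tool scores higher once it is used by the finance team, which mirrors the idea that the rating rises when usage expands into a sensitive business unit.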

Will enforcing control over Shadow AI slow down teams or block useful tools?

Oversight doesn’t have to mean stifling adoption. Acuvity supports policy enforcement that is both granular and adaptive. For instance, if a tool is widely used but carries moderate risk, you might configure policies to allow access only from approved accounts, or restrict the categories of data that can be shared with it. If a service is deemed high risk, you can block it entirely while offering employees a safer, sanctioned alternative. Policies can also be role-based, so that sensitive departments face stricter limits while lower-risk teams have more flexibility. This approach prevents a blanket ban that drives employees to hide their usage, while still ensuring that data leakage and compliance breaches are contained.
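The policy examples above can be sketched as a small decision function. This is a minimal illustration under stated assumptions: the risk tiers, the `Request` fields, the restricted data categories, and the `decide` function are hypothetical names invented here, not Acuvity's policy language.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    BLOCK = "block"


@dataclass(frozen=True)
class Request:
    tool_risk: str          # "low", "moderate", or "high" (hypothetical tiers)
    department: str
    approved_account: bool  # True if the user signed in with an approved account
    data_category: str


# Hypothetical settings: categories no one may share with moderate-risk tools,
# plus extra categories restricted for sensitive departments (role-based).
BASE_RESTRICTED = {"customer_records"}
EXTRA_RESTRICTED_BY_DEPARTMENT = {"finance": {"financial_forecasts"}}


def decide(req: Request) -> Action:
    """Return the action for one attempted use of an AI tool."""
    if req.tool_risk == "high":
        # High-risk services are blocked outright.
        return Action.BLOCK
    if req.tool_risk == "moderate":
        # Moderate-risk tools require an approved account...
        if not req.approved_account:
            return Action.BLOCK
        # ...and restricted data categories may not be shared with them.
        extra = EXTRA_RESTRICTED_BY_DEPARTMENT.get(req.department, set())
        if req.data_category in BASE_RESTRICTED | extra:
            return Action.BLOCK
    return Action.ALLOW
```

For example, `decide(Request("moderate", "marketing", True, "public_copy"))` returns `Action.ALLOW`, while the same request from a personal account, or a finance user sharing `financial_forecasts`, returns `Action.BLOCK`. In practice a blocked request would typically also point the user to a sanctioned alternative.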

What kinds of compliance or legal risks am I exposed to if I ignore Shadow AI?

The risks are significant and compound quickly. Data protection laws and standards such as GDPR, CCPA, HIPAA, and PCI DSS all impose strict requirements on how personal or sensitive data is processed. If employees are entering that data into uncontrolled AI tools, the organization may be in violation without even knowing it. Contracts with customers often include clauses about how data is handled; shadow AI use can breach those obligations, opening the door to legal disputes.

Regulators and auditors increasingly expect evidence of AI governance. Without logs, audit trails, or clear policies, you cannot demonstrate compliance, which may lead to fines or reputational fallout.

Acuvity addresses this by producing an authoritative inventory of AI use, documenting activity, and enforcing controls aligned to regulatory standards so you can prove compliance if challenged.

How quickly can I see results from managing Shadow AI with Acuvity?

Time to value is short.

As soon as Acuvity is deployed, it begins correlating traffic, user activity, and tool catalogs to surface which AI services are already in use. Within the first reporting cycle, you can see which tools are active, who is using them, and how data is flowing. Risk scores are generated in parallel, giving you an immediate sense of where the biggest exposures are. From there, you can apply initial policies — for example, blocking one high-risk service while restricting data categories for another.

The effect is visible quickly: fewer unsanctioned tools, clearer oversight, and a measurable reduction in high-risk activity. Over the following weeks, you can refine policies, onboard safer alternatives, and build audit trails that improve compliance posture.