AI Risk Assessment and Exposure Audits

Discover and assess AI risks across your entire environment before they become security incidents

Organizations deploying AI tools often lack visibility into their complete AI attack surface, making it impossible to assess their true risk exposure or demonstrate security posture to stakeholders. Traditional security assessments weren’t designed for AI environments where new services, APIs, and applications emerge daily, creating blind spots that can expose sensitive data or violate compliance requirements.

Most enterprises discover their AI security gaps only after incidents occur, when employees have already shared confidential information with unauthorized AI services or when auditors identify policy violations during compliance reviews. This reactive approach leaves organizations vulnerable and unable to make informed decisions about AI security investments or policy development.

AI risk assessment requires systematic discovery and analysis of all AI touchpoints across the organization, from approved enterprise tools to shadow AI usage, combined with contextual risk scoring that considers data sensitivity, compliance requirements, and business impact. Organizations need comprehensive visibility into their AI ecosystem before they can effectively secure it or demonstrate governance maturity to regulators and stakeholders.

Challenges & Risks

Organizations struggle to understand their AI risk exposure because AI services emerge faster than security teams can track them, and traditional security tools weren’t built to handle AI-specific risks.

- Unknown AI service proliferation occurs when new AI tools and services become accessible faster than security teams can evaluate them, creating expanding attack surfaces that remain invisible to traditional network monitoring

- Existing security tool limitations emerge when firewalls, proxies, and network security appliances block or allow AI services without understanding the specific risk profiles, compliance status, or data handling practices of individual AI platforms

- Shadow AI discovery challenges prevent organizations from identifying unapproved AI features that employees reach through approved applications such as Figma, Adobe, or Microsoft Office, which now embed AI capabilities

- Risk scoring complexity arises when organizations attempt to evaluate AI services across multiple dimensions including data residency, compliance certifications, security controls, and integration dependencies without standardized assessment frameworks

- Compliance gap identification becomes difficult when organizations cannot demonstrate comprehensive AI inventory and risk assessment to auditors, regulators, or internal governance committees

- Baseline establishment problems occur when organizations lack systematic methods to understand their current AI risk posture, making it impossible to measure improvement or demonstrate security maturity over time

Discovery

Identifies every unsanctioned AI tool in use across teams, accounts, and data flows, giving security and compliance leaders a complete inventory.

Risk Evaluation

Analyzes each tool against security, compliance, and vendor factors to surface which pose the greatest exposure.

Policy Enforcement

Applies precise controls to block unsafe tools, govern sensitive data use, and align activity with enterprise standards.

FAQ: AI Risk Assessments

What Is AI Risk Assessment and How Does It Differ From Traditional Security Audits?

AI risk assessment involves systematically discovering and analyzing all AI services, tools, and applications that your organization can access, then evaluating the security and compliance risks associated with each one. Unlike traditional security audits that examine your internal systems and applications, AI risk assessment focuses on external AI services that employees might use and the data exposure risks they create.

Traditional security audits look at systems you own and control. They examine your servers, databases, applications, and network infrastructure to identify vulnerabilities and compliance gaps. These audits assume that you have visibility into what systems exist and how they’re configured because they’re part of your IT environment.

AI risk assessment works differently because most AI tools are external services that employees access through web browsers or embedded features in business applications. You don’t control these AI services, but employees can share your company’s sensitive data with them. The risk comes from what data employees might share and whether those AI services have adequate security controls, proper data handling practices, and compliance certifications.

Discovery Challenges make AI risk assessment particularly complex. New AI services appear constantly, and employees often start using them without IT approval. Additionally, many business applications like Adobe, Figma, and Microsoft Office have added AI features, creating hidden AI access points that traditional network monitoring might miss.

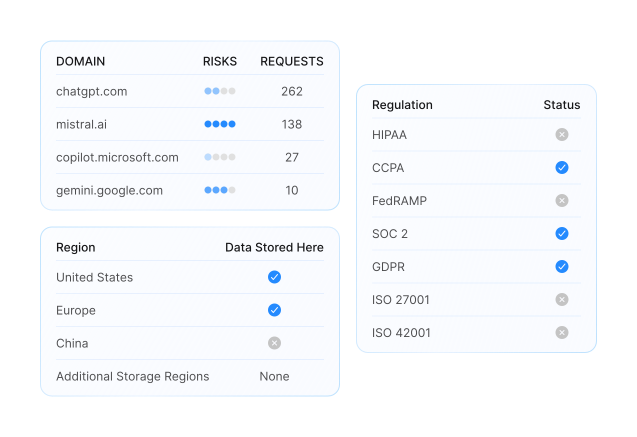

Network Security Tool Limitations create blind spots in traditional approaches. Firewalls and proxies can see that someone accessed an AI service, but they can’t determine whether that service meets your security requirements or handles data appropriately. A firewall might block or allow access to an AI service, but it doesn’t know whether that service has SOC 2 compliance, stores data in approved geographic regions, or has adequate security controls.

Risk Evaluation Complexity emerges because AI services vary widely in their security postures, compliance certifications, and data handling practices. Some AI services have enterprise-grade security controls and compliance certifications, while others are experimental services with minimal security oversight. Traditional security audits don’t provide frameworks for evaluating these external service risks systematically.

Continuous Change requires different approaches because new AI services emerge weekly, existing services add new features, and security postures change over time. Traditional IT assets change infrequently, but the AI landscape evolves so quickly that yesterday’s assessment might miss today’s risks.

Organizations need AI risk assessment to understand what AI services their employees can access, which ones pose the highest risks, and where they should focus their security efforts and policy development to protect sensitive data and maintain compliance.

How Can Organizations Discover All AI Services Accessible From Their Network?

Discovering all accessible AI services requires systematic testing that goes beyond traditional network monitoring because AI services operate through standard web protocols and often embed within approved business applications. Organizations need active discovery methods that can identify both obvious AI tools and hidden AI capabilities within existing software.

Active Network Scanning represents the most comprehensive discovery approach. Rather than waiting for employees to access AI services naturally, active scanning systematically tests connectivity to known AI domains and services from your network environment. This approach can quickly determine which AI services are accessible through your current security controls, which ones are blocked, and which ones your existing tools might be allowing without proper evaluation.
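The active scanning idea can be sketched in a few lines of Python. This is a minimal illustration, not a production scanner: the domain list is a small illustrative sample, the names `https_probe` and `scan` are hypothetical, and the two-way "accessible"/"blocked" classification is a simplification (a proxy that returns its own block page, for example, would be misread as "accessible" here).

```python
import urllib.error
import urllib.request
from typing import Callable, Dict, Iterable

# Illustrative sample only; a real scan would use a maintained catalog of AI domains.
CANDIDATE_DOMAINS = [
    "api.openai.com",
    "api.anthropic.com",
    "generativelanguage.googleapis.com",
]


def https_probe(domain: str, timeout: float = 5.0) -> str:
    """Return 'accessible' if any HTTP response comes back, else 'blocked'."""
    try:
        urllib.request.urlopen(f"https://{domain}/", timeout=timeout)
        return "accessible"
    except urllib.error.HTTPError:
        # The server answered (for example 401 or 404), so the network path is open.
        return "accessible"
    except (urllib.error.URLError, OSError):
        # DNS failure, refused connection, timeout, or TLS error.
        return "blocked"


def scan(domains: Iterable[str], probe: Callable[[str], str] = https_probe) -> Dict[str, str]:
    """Probe each domain once and record its reachability status."""
    return {domain: probe(domain) for domain in domains}
```

Running `scan(CANDIDATE_DOMAINS)` from a machine inside the network gives a first view of what current controls allow. The `probe` parameter is injectable so the classification logic can be exercised without real network traffic.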

Embedded AI Discovery becomes critical because many business applications now include AI features that aren’t obvious from network traffic analysis. Applications like Adobe Creative Cloud, Microsoft Office, Figma, and Zoom have integrated AI capabilities that employees can access within approved software. Traditional discovery methods might see normal application usage while missing AI interactions happening within those applications.

Systematic Testing Methodology involves testing connectivity to thousands of known AI services, APIs, and domains to create a complete picture of your AI attack surface. This includes testing major AI platforms like OpenAI, Anthropic, and Google, but also smaller specialized AI services, open source AI tools, and emerging platforms that employees might discover independently.

Security Control Evaluation during discovery provides insights into how your existing security tools handle different AI services. Testing can reveal whether your firewall properly categorizes AI services, whether your proxy applies appropriate policies, and whether your data loss prevention tools can monitor AI interactions effectively. This evaluation helps identify gaps between your security assumptions and actual protection levels.

Compliance and Risk Profiling as part of discovery involves analyzing discovered AI services for security certifications, data handling practices, geographical data storage, and compliance frameworks. Rather than just identifying which services are accessible, comprehensive discovery includes evaluating whether those services meet your organization’s security and compliance requirements.

Documentation and Baseline Creation ensures that discovery results provide actionable intelligence for security teams. Effective discovery creates detailed inventories of accessible AI services, their risk profiles, security controls, and accessibility status. This baseline enables organizations to track changes over time and prioritize security investments.
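Tracking change against a baseline can be as simple as comparing two inventory snapshots. The sketch below assumes each snapshot is a mapping from service name to accessibility status; the function name `diff_baseline` and the status strings are hypothetical.

```python
from typing import Dict, List


def diff_baseline(previous: Dict[str, str], current: Dict[str, str]) -> Dict[str, List[str]]:
    """Compare two inventories that map service name -> accessibility status."""
    return {
        # Services present now that were not in the earlier snapshot.
        "newly_discovered": sorted(set(current) - set(previous)),
        # Services in the earlier snapshot that the new scan did not find.
        "no_longer_seen": sorted(set(previous) - set(current)),
        # Services found in both snapshots whose status differs.
        "status_changed": sorted(
            name for name in set(previous) & set(current)
            if previous[name] != current[name]
        ),
    }
```

Each of the three lists maps to a different follow-up: new services need a risk profile, vanished services may be retired, and status changes may indicate a control that was altered between assessments.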

Automated vs. Manual Discovery approaches each have advantages. Automated discovery can test thousands of AI services quickly and consistently, providing comprehensive coverage and regular updates. Manual discovery allows for deeper analysis of specific high-risk services and can identify subtle AI integrations that automated tools might miss.

Organizations using systematic AI service discovery often find significant gaps between their assumptions about AI accessibility and actual exposure, making discovery a critical first step in developing effective AI security strategies.

Why Do Traditional Network Security Tools Miss AI-Specific Risks?

Traditional network security tools were designed for different types of applications and threats, making them inadequate for identifying and managing AI-specific risks. These tools can see AI traffic at the network level but lack the contextual understanding and analysis capabilities needed to assess AI security risks properly.

Application Identification Limitations create the primary gap between traditional security tools and AI risk management. Network security appliances like firewalls can identify that someone is connecting to OpenAI or Anthropic, but they cannot determine what type of AI interaction is occurring, what data is being shared, or whether the usage violates organizational policies. Traditional application identification relies on network signatures and protocols, while AI risk assessment requires understanding conversational content and data sharing patterns.

API-Level Complexity makes AI traffic particularly challenging for traditional tools to analyze. AI interactions typically involve multiple API calls, with sensitive information potentially appearing in any part of the conversation flow. A single AI interaction might involve dozens of API requests, and the actual data sharing might occur several calls into the sequence. Traditional network tools that examine individual packets or connections cannot reconstruct these complex interaction patterns to identify policy violations or data exposure.
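To illustrate why per-request inspection falls short, the following sketch groups request bodies by conversation before checking them. It assumes decrypted request bodies and a conversation identifier are available, which is itself a significant assumption. The single regular expression is a stand-in for real content analysis, and `flag_conversations` is a hypothetical name.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Stand-in pattern: a US Social Security number written as 123-45-6789.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def flag_conversations(requests: Iterable[Tuple[str, str]]) -> List[str]:
    """Take (conversation_id, request_body) pairs in arrival order and return
    the ids of conversations whose combined text matches the pattern."""
    bodies: Dict[str, List[str]] = defaultdict(list)
    for conversation_id, body in requests:
        bodies[conversation_id].append(body)
    return sorted(
        cid for cid, parts in bodies.items()
        if SSN_PATTERN.search(" ".join(parts))
    )
```

The point of the design is that the unit of evaluation is the whole conversation, so sensitive data that appears several calls into a sequence is still attributed to the interaction it belongs to.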

Dynamic Content Analysis Requirements exceed the capabilities of most network security tools. AI conversations involve natural language that requires content analysis to determine risk levels. Traditional tools might flag certain file types or specific data patterns, but they cannot analyze conversational prompts to determine whether an employee is sharing confidential business information, customer data, or intellectual property through seemingly innocent questions.

Service Evolution and Feature Changes happen faster than traditional security tools can adapt. When AI services add new features, change their API structures, or modify their data handling practices, traditional tools often continue applying outdated rules or classifications. AI services can change from week to week, while traditional security tool updates typically arrive monthly or quarterly, creating persistent gaps in coverage and accuracy.

Context-Dependent Risk Assessment requires understanding that the same AI interaction might be appropriate or inappropriate depending on user roles, data classification, and business context. Traditional network tools apply uniform policies regardless of context, while AI risk management requires understanding who is using AI tools, what type of data they’re sharing, and whether that usage aligns with organizational policies and compliance requirements.
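A context-aware decision can be sketched as a function of role, data classification, and service approval status. The roles, classification levels, and ceilings below are hypothetical examples rather than a recommended policy.

```python
from dataclasses import dataclass

# Hypothetical ordering of data classifications, lowest to highest sensitivity.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "regulated": 3}

# Hypothetical ceiling per role: the most sensitive class each role may share.
ROLE_CEILING = {"marketing": "internal", "engineering": "confidential", "contractor": "public"}


@dataclass(frozen=True)
class Interaction:
    role: str
    data_class: str
    service_approved: bool


def decide(event: Interaction) -> str:
    """Return 'allow' or 'block' for one AI interaction."""
    if event.data_class == "public":
        return "allow"  # Public data may go to any service in this sketch.
    if not event.service_approved:
        return "block"  # Non-public data never goes to unapproved services.
    ceiling = ROLE_CEILING.get(event.role, "public")  # Unknown roles get the lowest ceiling.
    if SENSITIVITY[event.data_class] <= SENSITIVITY[ceiling]:
        return "allow"
    return "block"
```

The same confidential prompt is allowed for an engineer on an approved service and blocked for a marketing user, which is the kind of distinction a uniform network rule cannot express.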

Multi-Modal Data Handling presents challenges that traditional tools weren’t designed to address. AI services increasingly handle documents, images, audio, and other content types that can contain sensitive information in ways that traditional data loss prevention tools cannot analyze effectively. An employee might upload a document containing sensitive information to an AI service, but traditional tools might only see the file transfer without understanding the AI’s subsequent processing and potential data exposure.

Compliance and Audit Requirements for AI usage often require detailed logging and analysis capabilities that traditional network tools cannot provide. Demonstrating AI usage compliance requires understanding user intent, data sensitivity, business justification, and policy adherence in ways that network-level monitoring cannot capture.

Organizations relying solely on traditional network security tools for AI risk management often discover significant blind spots when they implement AI-specific monitoring and analysis capabilities.

How Should Organizations Prioritize AI Security Risks Once They're Identified?

Risk prioritization for AI services requires balancing multiple factors including data sensitivity, compliance requirements, service security postures, and business impact. Organizations need systematic approaches to evaluate discovered AI risks and allocate security resources effectively rather than attempting to address all risks simultaneously.

Data Sensitivity Assessment forms the foundation of AI risk prioritization. AI services that could access regulated data like personally identifiable information, financial records, health information, or confidential business data represent higher priorities than services limited to public information. Organizations should classify their data and understand which AI services employees might use with different data types to focus protection efforts appropriately.

Compliance Impact Evaluation helps prioritize risks that could result in regulatory violations or audit failures. AI services used in regulated industries or with regulated data types require immediate attention, particularly if they lack appropriate compliance certifications or data handling controls. Services that could impact GDPR, HIPAA, PCI DSS, or other regulatory compliance deserve higher priority than those with minimal compliance implications.

Service Security Posture Analysis involves evaluating the security controls, certifications, and data handling practices of discovered AI services. Services with strong security certifications, clear data handling policies, and enterprise-grade controls pose lower risks than experimental services with minimal security oversight. Organizations should prioritize addressing access to high-risk services while potentially allowing continued use of well-secured services with appropriate controls.

Business Criticality and Usage Patterns affect risk prioritization because widely used AI services or those critical to business operations require different approaches than rarely used experimental tools. High-usage AI services with poor security postures create greater overall risk exposure than little-used services with similar security issues. Understanding actual usage patterns helps focus security efforts where they’ll have the greatest impact.

Existing Control Effectiveness assessment determines which discovered risks are already adequately managed versus those requiring immediate attention. Some AI services might be accessible through your network but already subject to appropriate data loss prevention, access controls, or monitoring capabilities. Prioritizing gaps in existing controls helps optimize security resource allocation.

Attack Surface and Integration Complexity considerations factor in how AI services connect to your environment and what additional risks they might introduce. AI services that integrate deeply with your business applications or have extensive API access create different risk profiles than standalone services with limited integration capabilities. Services that could provide pathways for lateral movement or additional compromise deserve higher priority attention.

Risk Tolerance and Business Context influence prioritization decisions because organizations in different industries or with different risk profiles may appropriately prioritize the same risks differently. A financial services company might prioritize any AI service that could access customer data, while a technology company might focus more heavily on AI services that could access intellectual property or source code.

Remediation Feasibility affects practical prioritization because some AI risks can be addressed quickly through policy or technical controls, while others might require extensive user education, business process changes, or technology investments. Prioritizing quick wins alongside longer-term strategic initiatives helps demonstrate progress while building comprehensive AI security programs.

Effective AI risk prioritization creates actionable roadmaps that help security teams focus their efforts on the most critical risks while building systematic approaches to managing their organization’s AI attack surface comprehensively.
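One simple way to combine several of these factors is a weighted score. The sketch below is an assumption-laden illustration: the four factors are a subset of those discussed above, the 1 to 5 scales and the weights are hypothetical, and a real program would tune them to its own risk tolerance.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical weights; each organization would tune these to its risk tolerance.
WEIGHTS = {
    "data_sensitivity": 0.4,
    "compliance_impact": 0.3,
    "security_posture": 0.2,
    "usage": 0.1,
}


@dataclass
class ServiceRisk:
    name: str
    data_sensitivity: int   # 1 (public data only) to 5 (regulated data)
    compliance_impact: int  # 1 (none) to 5 (direct regulatory exposure)
    security_posture: int   # 1 (strong controls) to 5 (no known controls)
    usage: int              # 1 (rare) to 5 (organization-wide)

    def score(self) -> float:
        """Weighted sum of the four factor ratings."""
        return sum(weight * getattr(self, factor) for factor, weight in WEIGHTS.items())


def prioritize(services: List[ServiceRisk]) -> List[str]:
    """Return service names ordered from highest to lowest weighted score."""
    return [s.name for s in sorted(services, key=lambda s: s.score(), reverse=True)]
```

A weighted sum is easy to explain to stakeholders, though it can hide a single critical factor behind low ratings elsewhere, so some teams may prefer to add hard rules such as always escalating services that touch regulated data.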

What Should an AI Risk Assessment Report Include to Be Actionable for Security Teams?

An actionable AI risk assessment report must provide security teams with specific, prioritized findings and clear recommendations for addressing discovered risks. The report should translate technical discoveries into business context while providing sufficient detail for security teams to develop implementation plans and demonstrate progress to stakeholders.

Executive Summary with Business Impact should lead the report with clear explanations of what AI services were discovered, which ones pose the highest risks, and what business impacts could result from these risks. This section must translate technical findings into business language that executives and stakeholders can understand, focusing on potential data exposure, compliance violations, and operational risks rather than technical details.

Complete AI Service Inventory provides the detailed foundation that security teams need to understand their AI attack surface. This inventory should list every discovered AI service, its accessibility status, security certifications, compliance posture, and risk rating. The inventory should distinguish between services that are blocked, allowed, or unknown to existing security controls, helping teams understand where their current protections are effective or inadequate.
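An inventory entry along these lines could be modeled as a small record. The field names and status values below are hypothetical and only mirror the attributes listed above.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class InventoryEntry:
    service: str
    control_status: str                      # "blocked", "allowed", or "unknown"
    certifications: List[str] = field(default_factory=list)
    risk_rating: str = "unrated"             # for example "high", "medium", "low"


def group_by_status(entries: List[InventoryEntry]) -> Dict[str, List[str]]:
    """Group service names by how existing security controls treat them."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for entry in entries:
        grouped[entry.control_status].append(entry.service)
    return dict(grouped)
```

Grouping by control status makes the "unknown" bucket visible, which is where existing controls have no explicit position on a service.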

Risk Prioritization Matrix organizes discovered risks by severity, business impact, and remediation complexity to help security teams focus their efforts appropriately. High-risk services that could access sensitive data should be clearly distinguished from lower-risk services that pose minimal exposure. The prioritization should consider both immediate threats and longer-term strategic risks.

Gap Analysis of Existing Controls identifies where current security tools and policies are providing adequate protection versus where additional controls are needed. This analysis should examine firewall rules, proxy policies, data loss prevention configurations, and access controls to determine which AI services are properly managed and which ones create security gaps.

Specific Remediation Recommendations provide actionable steps that security teams can implement immediately, in the short term, and as part of longer-term strategic initiatives. Recommendations should include specific technical controls, policy changes, user education requirements, and vendor evaluation criteria. Each recommendation should specify expected outcomes, resource requirements, and implementation timelines.

Compliance and Regulatory Implications should address how discovered AI risks affect the organization’s compliance posture and regulatory obligations. This section should identify services that could impact specific regulations, highlight compliance gaps that require immediate attention, and provide documentation suitable for audit purposes.

Baseline Metrics and Measurement Criteria establish how the organization will track improvement over time and demonstrate the effectiveness of security investments. The report should define metrics for AI service discovery, risk reduction, policy compliance, and security control effectiveness that can be measured consistently in future assessments.
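Metrics of this kind can be computed directly from the inventory so that successive assessments are comparable. The three metrics below are hypothetical examples, as are the field names `status` and `risk`.

```python
from typing import Dict, List


def assessment_metrics(inventory: List[Dict[str, str]]) -> Dict[str, float]:
    """Each item needs 'status' ('allowed', 'blocked', or 'unknown')
    and 'risk' ('high', 'medium', or 'low')."""
    total = len(inventory)
    high_risk_allowed = sum(
        1 for s in inventory if s["risk"] == "high" and s["status"] == "allowed"
    )
    unknown = sum(1 for s in inventory if s["status"] == "unknown")
    return {
        "services_discovered": total,
        "high_risk_allowed": high_risk_allowed,
        # Share of services that existing controls have no explicit rule for.
        "pct_unknown_to_controls": round(100 * unknown / total, 1) if total else 0.0,
    }
```

Recording the same metrics at every assessment gives a trend line, for instance a falling count of high-risk services that remain allowed, that can be shown to stakeholders.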

User Education and Policy Development Guidance helps organizations address the human factors that contribute to AI risk exposure. This section should identify common user behaviors that create risks, recommend policy changes or additions, and suggest training programs that can reduce risky AI usage while supporting legitimate business needs.

Technology Integration Recommendations provide specific guidance on how to integrate AI risk management into existing security tools and processes. This might include recommendations for security information and event management integration, policy engine configurations, or additional security tools that can provide ongoing AI risk monitoring and control.

The most actionable AI risk assessment reports provide clear roadmaps that help security teams move from discovery to implementation, with specific priorities, timelines, and success criteria that enable systematic improvement of the organization’s AI security posture.