AI Policy Enforcement

Enforce consistent AI usage policies across your entire organization

Organizations need clear, enforceable policies governing how employees use AI tools, what data can be shared with AI applications, and which AI services are approved for business use. Without proper enforcement mechanisms, even well-crafted AI policies remain ineffective guidelines that users can easily bypass or ignore.

Traditional policy enforcement approaches struggle with AI because these tools operate differently than conventional applications. AI interactions happen through natural language conversations, involve unstructured data, and often blur the lines between personal and professional use. Employees might share sensitive information through seemingly innocent prompts, use unauthorized AI tools for work tasks, or inadvertently violate data handling policies through AI-generated outputs.

Effective AI policy enforcement requires understanding the context of AI interactions, analyzing the content being shared, and implementing controls that can operate in real time across diverse AI applications and use cases. Organizations need enforcement mechanisms that protect against policy violations while preserving the productivity benefits that AI tools provide to their workforce.

Challenges & Risks

Organizations implementing AI policy enforcement face several operational and technical obstacles that can undermine even well-designed governance frameworks.

- Shadow AI usage bypasses established approval processes when employees adopt AI tools independently, creating policy violations that organizations cannot detect through conventional network or application monitoring

- Data classification becomes complex when employees share sensitive information through conversational prompts that may appear benign but contain confidential business data, customer information, or intellectual property

- Cross-platform policy inconsistency emerges when different AI applications have varying security controls and usage restrictions, making it difficult to maintain uniform policy enforcement across the AI ecosystem

- Real-time enforcement challenges occur because AI interactions happen at conversational speed, requiring policy controls that can analyze content and context instantly to prevent violations before they occur

- User behavior circumvention develops as employees find creative ways to work around AI restrictions, such as rephrasing prohibited prompts or using personal accounts to access restricted AI services

- Audit and compliance gaps appear when organizations cannot demonstrate consistent policy enforcement across AI interactions, creating risks for regulatory violations and internal governance failures

Enforce AI Policies with Acuvity


Real-Time Content Analysis and Policy Enforcement

Analyze and enforce policies at the moment of AI interaction

Acuvity monitors AI conversations in real time, analyzing prompts and responses for policy violations, sensitive data exposure, and compliance issues while automatically blocking or modifying interactions that violate organizational policies.

Role-Based Access Controls and Contextual Permissions

Enforce AI usage policies based on user roles and data sensitivity

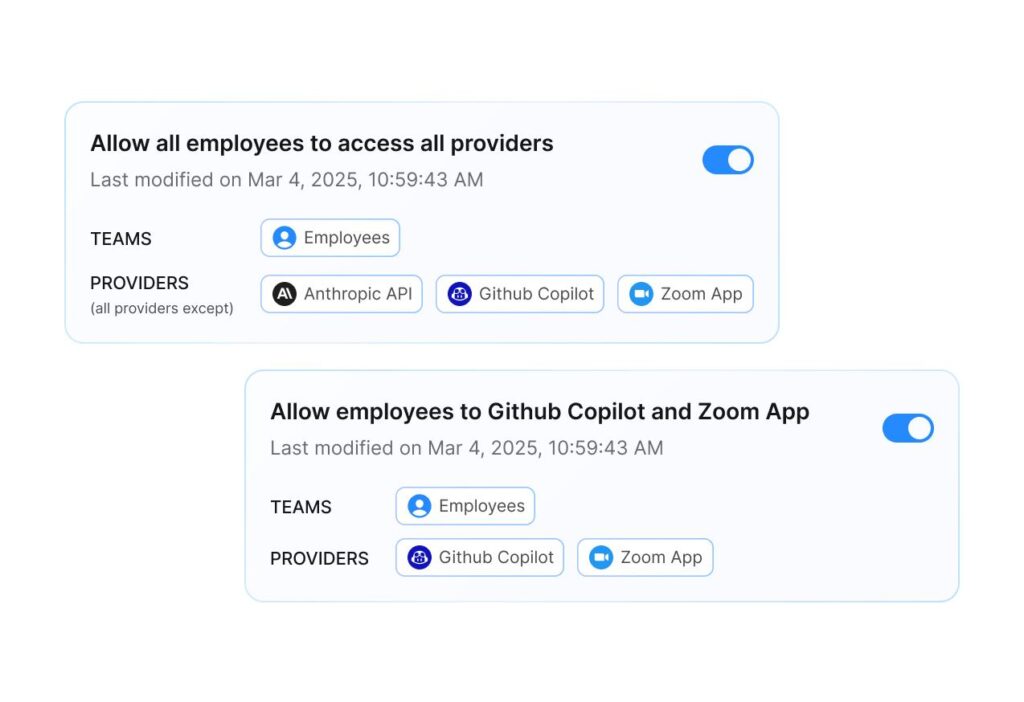

Implement dynamic access controls that adapt based on user identity, data classification, and business context, ensuring employees only access AI tools and capabilities appropriate for their roles and responsibilities.

Comprehensive Audit Trails and Compliance Reporting

Maintain detailed records for governance and compliance

Generate complete audit trails of AI interactions, policy enforcement decisions, and compliance status with automated reporting capabilities that support regulatory requirements and internal governance processes.

AI Policy Enforcement FAQs

What Is AI Policy Enforcement and Why Do Traditional IT Controls Fall Short?

AI policy enforcement refers to the systematic implementation and monitoring of organizational rules governing artificial intelligence usage, data sharing, and acceptable AI application deployment across the enterprise. Unlike traditional IT policy enforcement that relies on network controls, application permissions, and device management, AI policy enforcement must address the unique challenges of natural language interactions, unstructured data flows, and dynamic content generation.

Traditional IT controls fail with AI applications because they were designed for predictable, structured interactions with well-defined data inputs and outputs. Network security tools can detect when someone accesses an AI service but cannot determine whether the interaction violates data handling policies. Endpoint protection can monitor file transfers but cannot analyze conversational prompts that might contain sensitive information. Application controls can restrict access to specific AI tools but cannot prevent policy violations within approved applications.

The Content Analysis Challenge represents a fundamental limitation of traditional controls. AI interactions involve natural language conversations where sensitive information can be embedded within seemingly innocent prompts. An employee might ask an AI tool to “help improve this customer feedback” while inadvertently sharing personally identifiable information, financial data, or confidential business details. Traditional security tools see only the network traffic to an approved AI service, missing the actual policy violation occurring within the conversation content.
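To make the gap concrete, the following minimal sketch inspects the text of a prompt rather than the network destination. The pattern set and the function name `find_sensitive_spans` are illustrative assumptions, not part of any specific product, and regular expressions alone would miss most real-world sensitive content.

```python
import re

# Illustrative patterns only; a production detector would need far more coverage.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}


def find_sensitive_spans(prompt: str) -> list[tuple[str, str]]:
    """Return (label, matched_text) pairs for every pattern hit in the prompt."""
    hits = []
    for label, pattern in SENSITIVE_PATTERNS.items():
        for match in pattern.finditer(prompt):
            hits.append((label, match.group()))
    return hits


prompt = "Help improve this customer feedback from jane.doe@example.com, SSN 123-45-6789."
print(find_sensitive_spans(prompt))
```

A network-level control would see only a request to an approved service here; the content check is what surfaces the email address and the identifier.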

Dynamic Context Requirements create additional enforcement challenges. AI policy violations often depend on context that traditional tools cannot assess. The same prompt might be acceptable from one user in a specific role but inappropriate from another user or in a different business context. Effective enforcement requires understanding user identity, data classification, business purpose, and organizational policies in real time.

Multi-Modal Data Complexity emerges as organizations use AI tools that process documents, images, code, and other content types. Traditional data loss prevention tools may flag a spreadsheet being uploaded to an AI service but cannot analyze whether the AI’s subsequent processing and output generation creates new policy violations or data exposure risks.

Behavioral Pattern Analysis becomes necessary because AI policy violations often involve subtle patterns of usage that develop over time rather than single, obvious infractions. An employee might gradually share more sensitive information with AI tools, test the boundaries of acceptable usage, or find creative ways to circumvent restrictions. Traditional controls lack the behavioral analysis capabilities needed to detect these evolving violation patterns.

Organizations need policy enforcement approaches specifically designed for AI environments that can analyze content, understand context, monitor behavioral patterns, and implement controls that operate at the speed and complexity of AI interactions while maintaining business productivity.

How Can Organizations Implement Granular AI Usage Policies Across Different Roles and Departments?

Implementing granular AI usage policies requires understanding that different organizational roles have varying data access rights, business responsibilities, and acceptable risk profiles when using AI tools. Effective granular policy implementation goes beyond simple allow/block decisions to create contextual controls that adapt based on user identity, data sensitivity, and business purpose.

Role-Based Policy Framework forms the foundation of granular AI governance. Marketing teams might be permitted to use AI tools for content generation with customer data but restricted from accessing financial information. Engineering teams might have broader access to code analysis AI tools but face restrictions on sharing proprietary algorithms or architectural details. Executive teams might access AI tools for strategic analysis while facing enhanced controls around confidential merger and acquisition information.

Data Classification Integration enables policies that adapt based on information sensitivity rather than relying solely on user roles. The same AI tool might be fully accessible for public information, restricted for internal business data, and completely blocked for confidential or regulated information. Effective granular policies automatically classify content as users interact with AI tools, applying appropriate restrictions in real time based on data sensitivity levels.
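The three tiers described above can be sketched as a small lookup. This is a simplified illustration: the `Sensitivity` and `Action` names, the mapping, and the fallback for unclassified content are all assumptions chosen for the example, and the classification step itself is taken as given.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3


class Action(Enum):
    ALLOW = "allow"
    RESTRICT = "restrict"
    BLOCK = "block"


# Hypothetical mapping mirroring the three tiers described in the text.
ACTION_BY_SENSITIVITY = {
    Sensitivity.PUBLIC: Action.ALLOW,
    Sensitivity.INTERNAL: Action.RESTRICT,
    Sensitivity.CONFIDENTIAL: Action.BLOCK,
}


def decide(labels: list[Sensitivity]) -> Action:
    """Apply the action for the most sensitive label found in the content."""
    if not labels:
        # Assumption: unclassified content is handled conservatively.
        return Action.RESTRICT
    highest = max(labels, key=lambda s: s.value)
    return ACTION_BY_SENSITIVITY[highest]


print(decide([Sensitivity.PUBLIC, Sensitivity.INTERNAL]))
```

Taking the most sensitive label means a single confidential fragment in an otherwise public document is enough to block the interaction.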

Contextual Decision Making allows policies to consider multiple factors simultaneously when determining whether to allow, restrict, or modify AI interactions. A sales representative accessing customer relationship management data through an AI tool might face different restrictions based on their specific customer assignments, the time of day, their physical location, and the type of information being processed.

Dynamic Permission Adjustment provides the flexibility to modify AI access rights based on changing business needs, project assignments, or risk assessments. Organizations can temporarily grant enhanced AI tool access for specific projects while automatically reverting to standard permissions when those projects conclude. This dynamic approach prevents the accumulation of excessive permissions that often occurs with static access control systems.
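One way to picture automatic reversion is to store an expiry time with each temporary grant and compute effective permissions at the moment of the request. The sketch below assumes a hypothetical `TemporaryGrant` record and made-up capability names; it is not drawn from any particular access control system.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class TemporaryGrant:
    """A time-boxed permission (hypothetical structure for illustration)."""
    user: str
    capability: str
    expires_at: datetime


def active_capabilities(
    user: str,
    standard: set[str],
    grants: list[TemporaryGrant],
    now: datetime,
) -> set[str]:
    """Return standard permissions plus any of the user's grants still in force."""
    temporary = {
        g.capability for g in grants if g.user == user and now < g.expires_at
    }
    return standard | temporary


project_start = datetime(2025, 1, 6, tzinfo=timezone.utc)
grants = [TemporaryGrant("alice", "code_analysis_ai", project_start + timedelta(days=30))]
standard = {"general_chat_ai"}

# During the project the extra capability is active.
print(active_capabilities("alice", standard, grants, project_start + timedelta(days=10)))
# After expiry the user is back to standard permissions with no manual cleanup.
print(active_capabilities("alice", standard, grants, project_start + timedelta(days=31)))
```

Because expiry is evaluated on every request, nothing has to remember to revoke the grant, which is what keeps permissions from accumulating.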

Cross-Platform Policy Consistency ensures that granular controls apply uniformly across different AI tools and services. An employee restricted from sharing financial data through one AI application should face the same restrictions across all approved AI tools. This consistency requires centralized policy management that can translate organizational rules into specific controls for different AI platforms and services.

Audit and Compliance Integration provides the detailed logging and reporting necessary to demonstrate policy compliance and investigate potential violations. Granular policies generate detailed audit trails that show not just what actions were taken but also the policy logic that determined whether those actions were appropriate based on user roles, data classification, and organizational context.
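An audit entry that records the policy logic alongside the action might look like the sketch below. The field names, the rule identifier `ROLE-DATA-007`, and the tool name are hypothetical; the point is only that the deciding rule and its reason are stored with the decision.

```python
import json
from datetime import datetime, timezone


def build_audit_record(
    user: str,
    role: str,
    tool: str,
    classification: str,
    decision: str,
    rule_id: str,
    reason: str,
    timestamp: datetime,
) -> str:
    """Serialize one enforcement decision, including the rule that produced it."""
    record = {
        "timestamp": timestamp.isoformat(),
        "user": user,
        "role": role,
        "tool": tool,
        "classification": classification,
        "decision": decision,
        "rule_id": rule_id,
        "reason": reason,
    }
    return json.dumps(record, sort_keys=True)


line = build_audit_record(
    user="u-1042",
    role="marketing",
    tool="example-chat-ai",
    classification="financial",
    decision="block",
    rule_id="ROLE-DATA-007",
    reason="role 'marketing' is not permitted to share financial data",
    timestamp=datetime(2025, 1, 6, 9, 30, tzinfo=timezone.utc),
)
print(line)
```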

Exception Handling and Escalation mechanisms allow for business flexibility while maintaining policy integrity. When business needs require temporary policy exceptions, granular systems can provide controlled overrides with appropriate approval workflows, enhanced monitoring, and automatic expiration of temporary permissions.

Organizations implementing granular AI policies must balance security requirements with business productivity, ensuring that policy controls enable rather than impede legitimate AI usage while maintaining appropriate protection for sensitive information and compliance obligations.

What Are the Key Challenges in Monitoring and Auditing AI Policy Compliance?

Monitoring and auditing AI policy compliance presents unique challenges because AI interactions involve natural language conversations, unstructured data processing, and dynamic content generation that traditional audit approaches struggle to assess effectively. Organizations must develop specialized monitoring capabilities that can analyze conversational content, track behavioral patterns, and provide comprehensive audit trails for AI governance.

Content Analysis at Scale represents the primary technical challenge in AI policy monitoring. Traditional audit systems examine structured transactions, file transfers, and database queries, but AI policy compliance requires analyzing conversational prompts, document uploads, and generated responses for policy violations. This analysis must happen in real time across potentially thousands of daily AI interactions while accurately identifying sensitive information, inappropriate content, and policy-violating behaviors.

Behavioral Pattern Recognition becomes essential because AI policy violations often develop gradually rather than occurring as single, obvious events. An employee might progressively test policy boundaries, gradually share more sensitive information, or develop workarounds for established restrictions. Effective monitoring systems must establish baseline usage patterns for individual users and detect anomalies that might indicate intentional policy circumvention or inadvertent compliance drift.
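As a simplified illustration of baselining, the sketch below compares a user's count of flagged prompts today against that user's own history using a z-score. The function name, the choice of metric, and the threshold of three standard deviations are assumptions for the example; real systems would likely combine several signals.

```python
from statistics import mean, stdev


def is_anomalous(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's count if it sits far above the user's own baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase is a departure from baseline.
        return today > baseline
    return (today - baseline) / spread > threshold


# Daily counts of prompts flagged as containing sensitive data (made-up numbers).
history = [2, 3, 2, 4, 3, 2, 3]
print(is_anomalous(history, today=3))
print(is_anomalous(history, today=12))
```

Measuring each user against their own history, rather than a global limit, is what lets gradual drift stand out for one person without flagging teams whose normal usage is simply heavier.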

Multi-Platform Correlation challenges organizations using diverse AI tools and services. Policy violations might span multiple platforms, with users sharing information through one AI tool and using outputs from another service in ways that collectively violate organizational policies. Comprehensive monitoring requires correlating activities across different AI applications, maintaining user attribution, and understanding how information flows between different AI interactions.

Context-Dependent Compliance Assessment creates complexity because the same AI interaction might be compliant or non-compliant depending on user roles, data classification, business purpose, and timing. Monitoring systems must understand organizational context, evaluate interactions against role-specific policies, and account for legitimate exceptions or temporary permission modifications.

Privacy and Employee Relations Considerations emerge as organizations balance comprehensive monitoring with employee privacy expectations and workplace trust. AI policy monitoring necessarily involves analyzing employee conversations with AI tools, which can include personal information, creative work, and confidential business communications. Organizations must implement monitoring approaches that protect employee privacy while providing adequate oversight for policy compliance.

Evidence Collection and Investigation requires specialized capabilities for AI-related policy violations. Traditional incident investigation focuses on system logs, network traffic, and file access records. AI policy investigations must preserve conversational context, analyze prompt and response patterns, and maintain chain of custody for natural language evidence that might be required for disciplinary actions or legal proceedings.

Regulatory and Compliance Reporting becomes complex when AI interactions affect regulated data or business processes. Organizations must demonstrate to auditors and regulators that their AI usage complies with industry standards, data protection requirements, and internal governance policies. This demonstration requires detailed audit trails, policy enforcement evidence, and statistical reporting on AI policy compliance rates.

Performance and Scalability considerations affect monitoring system design as AI usage grows throughout the organization. Real-time content analysis, behavioral monitoring, and comprehensive logging create significant computational and storage requirements. Monitoring systems must scale efficiently while maintaining analysis accuracy and response times that don’t impede business productivity.

Successful AI policy monitoring requires integrated approaches that combine automated content analysis, behavioral monitoring, manual review processes, and comprehensive audit reporting to provide organizations with the visibility and control necessary for effective AI governance.

How Should Organizations Handle AI Policy Violations and Incident Response?

AI policy violations require specialized incident response procedures that account for the unique characteristics of conversational AI interactions, the potential for sensitive data exposure, and the need to balance security concerns with business continuity. Organizations must develop response protocols that can quickly assess violation severity, contain potential damage, and prevent recurrence while maintaining employee productivity and trust.

Violation Classification and Severity Assessment forms the critical first step in AI incident response. Minor violations might involve using unauthorized AI tools for non-sensitive tasks, while severe violations could include sharing regulated data with external AI services or attempting to manipulate AI tools to bypass security controls. Response procedures must quickly categorize violations based on data sensitivity, potential impact, user intent, and regulatory implications to ensure appropriate response measures.
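A triage rule of this kind can be sketched as a small function. The three tiers, the `Violation` fields, and the specific rules below are assumptions made for illustration; they follow the examples in the paragraph above but are not a prescribed scheme.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    MINOR = 1
    MODERATE = 2
    SEVERE = 3


@dataclass(frozen=True)
class Violation:
    """Facts about one incident (hypothetical fields for illustration)."""
    unauthorized_tool: bool = False  # recorded for reporting; not a tie-breaker here
    sensitive_data: bool = False
    regulated_data: bool = False
    external_service: bool = False
    bypass_attempt: bool = False


def classify(v: Violation) -> Severity:
    """Map incident facts to a severity tier; the rules are assumptions."""
    if v.bypass_attempt or (v.regulated_data and v.external_service):
        return Severity.SEVERE
    if v.sensitive_data or v.regulated_data:
        return Severity.MODERATE
    return Severity.MINOR


print(classify(Violation(unauthorized_tool=True)).name)
print(classify(Violation(regulated_data=True, external_service=True)).name)
```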

Immediate Containment and Access Control procedures must account for the real-time nature of AI interactions and the potential for ongoing data exposure. Unlike traditional security incidents that might involve isolating compromised systems, AI policy violations often require immediately suspending user access to specific AI tools, blocking certain types of prompts or content, or implementing enhanced monitoring for particular users or data types. Containment decisions must balance security concerns with business continuity needs.

Data Impact Assessment and Recovery becomes complex when violations involve AI tools that have processed, analyzed, or generated content based on sensitive information. Organizations must determine what information might have been exposed, whether AI-generated outputs contain sensitive data derivatives, and what steps are necessary to prevent further data compromise. This assessment often requires analyzing conversational histories, understanding AI processing capabilities, and evaluating potential data retention by external AI services.

User Communication and Education requires careful handling because AI policy violations often result from misunderstanding rather than malicious intent. Many employees may not fully understand how their AI interactions could violate organizational policies or create security risks. Incident response should include educational components that help users understand policy requirements while avoiding punitive approaches that might discourage legitimate AI usage or honest reporting of potential violations.

Documentation and Evidence Preservation must capture the specific nature of AI policy violations for investigation, disciplinary procedures, and policy improvement. This includes preserving conversational content, understanding user intent, documenting the business context of AI usage, and maintaining records that demonstrate the organization’s policy enforcement efforts for regulatory and legal purposes.

Root Cause Analysis and Policy Improvement should examine whether violations result from unclear policies, inadequate training, insufficient technical controls, or legitimate business needs that current policies don’t adequately address. AI policy violations often reveal gaps in organizational understanding of AI capabilities, employee workflow requirements, or the practical implementation of governance frameworks.

Regulatory Notification and Compliance Response becomes necessary when AI policy violations involve regulated data or business processes. Organizations must understand their notification obligations, prepare appropriate documentation for regulatory inquiries, and demonstrate their policy enforcement and incident response capabilities. This is particularly important in industries with strict data handling requirements or where AI decisions affect customer rights or business operations.

Prevention and Control Enhancement should leverage violation patterns to improve policy design, enhance monitoring capabilities, and strengthen user education programs. Organizations should analyze violation trends to identify common policy confusion points, technical control gaps, and areas where additional automation or user guidance might prevent future incidents.

Stakeholder Communication and Reputation Management may be required for significant violations that could affect customer trust, business relationships, or organizational reputation. AI policy violations can raise concerns about data handling practices, business ethics, and organizational competence in managing emerging technologies.

Effective AI policy violation response balances immediate security needs with long-term organizational learning, ensuring that incident response strengthens rather than undermines the organization’s overall AI governance maturity and employee engagement with policy requirements.

What Role Does Real-Time Policy Enforcement Play in AI Governance?

Real-time policy enforcement represents a critical evolution in AI governance because traditional after-the-fact monitoring and remediation approaches cannot adequately protect against the immediate and potentially irreversible consequences of AI policy violations. When employees interact with AI tools through natural language conversations, policy violations can result in instant data exposure, inappropriate content generation, or compliance breaches that cannot be undone after detection.

Immediate Risk Prevention distinguishes real-time enforcement from traditional audit-and-remediate approaches. When an employee attempts to share sensitive customer information with an AI tool, real-time enforcement can detect the sensitive content, block the interaction, and provide immediate feedback about policy requirements. This prevents the data exposure rather than simply documenting it for later investigation. The conversational nature of AI interactions makes this immediate intervention essential because once information is shared with an AI service, organizations may have limited control over data retention, processing, or potential exposure.

Contextual Decision Making enables real-time enforcement systems to make nuanced policy decisions based on multiple factors including user identity, data classification, business context, and risk assessment. Rather than applying simple allow/block rules, sophisticated real-time enforcement can modify interactions by redacting sensitive information, providing alternative AI tool recommendations, or requiring additional approvals for high-risk activities while allowing legitimate business use to continue.

Educational Integration and User Guidance transforms policy enforcement from a punitive control mechanism into a learning opportunity. Real-time systems can provide immediate feedback when users approach policy boundaries, explain why certain interactions are restricted, and suggest alternative approaches that achieve business objectives while maintaining compliance. This educational component helps build organizational AI literacy while preventing violations.

Adaptive Policy Application allows real-time enforcement to account for changing business contexts, temporary permission modifications, and evolving risk assessments. During merger and acquisition activities, for example, enforcement systems might automatically apply enhanced restrictions on AI interactions involving confidential business information while relaxing controls for routine operational tasks. This adaptability ensures that policy enforcement supports rather than impedes legitimate business activities.

Content Analysis and Modification capabilities enable real-time systems to analyze conversational content, identify potential policy violations, and modify interactions to maintain compliance while preserving business value. This might involve automatically redacting sensitive information from prompts, filtering AI-generated responses to remove inappropriate content, or providing sanitized versions of business information that can safely be used with AI tools.
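Prompt redaction, in its simplest form, replaces matched sensitive values with placeholders before the prompt leaves the organization. The sketch below assumes two illustrative patterns and a hypothetical `redact` helper; it shows the mechanism only and does not represent how any particular product detects sensitive data.

```python
import re

# Illustrative rules: (pattern, placeholder). Real coverage would be much broader.
REDACTION_RULES = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def redact(prompt: str) -> tuple[str, int]:
    """Return the sanitized prompt and the number of values replaced."""
    total = 0
    for pattern, placeholder in REDACTION_RULES:
        prompt, count = pattern.subn(placeholder, prompt)
        total += count
    return prompt, total


sanitized, replaced = redact("Email bob@corp.example about SSN 123-45-6789")
print(sanitized)
print(replaced)
```

Returning the replacement count alongside the sanitized text lets the caller decide whether to forward the prompt silently, warn the user, or escalate.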

Integration with Business Workflows ensures that real-time enforcement operates seamlessly within established business processes rather than creating additional friction or administrative overhead. Effective real-time systems integrate with identity management, data classification, and business application workflows to provide policy enforcement that feels natural and supportive rather than obstructive.

Audit Trail Generation provides comprehensive logging of policy enforcement decisions, user interactions, and business context for compliance reporting, investigation, and policy improvement. Real-time systems must maintain detailed records of enforcement actions while protecting employee privacy and maintaining system performance.

Performance and Scalability Considerations require real-time enforcement systems to operate at conversational speed across potentially thousands of simultaneous AI interactions without creating delays that impede business productivity. This technical challenge requires sophisticated content analysis, efficient policy engines, and scalable infrastructure that can maintain response times measured in milliseconds rather than seconds.

Risk-Based Enforcement Prioritization allows real-time systems to focus enforcement attention on the highest-risk interactions while providing streamlined experiences for routine, low-risk AI usage. This risk-based approach ensures that security controls are proportionate to actual threats and business impact rather than applying uniform restrictions that might unnecessarily constrain legitimate business activities.

Real-time policy enforcement represents a fundamental shift from reactive to proactive AI governance: organizations can prevent policy violations rather than simply detecting and responding to them after the fact. The result is more effective protection for sensitive information alongside productive AI adoption across the enterprise.