AI Governance and Compliance

Accountability, oversight, and audit readiness for enterprise AI

AI Governance and Compliance refers to the structures that keep AI activity aligned with regulatory frameworks, contracts, and internal policies. Many organizations have deployed AI broadly, but few have governance programs that operate at the same scale. Policies often exist on paper but are not embedded in day-to-day workflows, leaving compliance gaps. Regulators and industry bodies now expect vendor oversight, clear accountability, traceability, and audit-ready records, with alignment to standards such as the EU AI Act and NIST AI RMF.

With Acuvity, enterprises gain visibility into AI use, assign responsibility for compliance, monitor third-party providers, and maintain traceable logs that adapt as regulations and vendor practices evolve.

Challenges & Risks

Regulation, oversight, and policy often lag behind AI adoption. When governance is weak or fragmented, serious problems can follow.

- Many organizations have deployed AI broadly but still lack formal governance programs, leaving oversight inconsistent.

- Policies often stay on paper instead of becoming part of daily workflows, so tool adoption, decisions, and vendor integrations proceed without being checked against compliance requirements.

- Vendors and third parties introduce risk: gaps in how they handle data, changing contract terms, jurisdictional issues, or unclear data practices can violate regulation or internal policy.

- Without clear accountability and traceable records, it’s hard to demonstrate compliance during audits, investigations, or regulator inquiries. Missing logs, unclear ownership, or inconsistent documentation all weaken risk posture.

- As usage scales, regulation changes, and vendor behavior shifts, static governance policies become outdated, producing blind spots and compliance gaps.

Automated Policy Enforcement

Acuvity connects to identity systems and applies rules dynamically, controlling who can use AI tools, what data they can handle, and how policies are enforced across the enterprise.

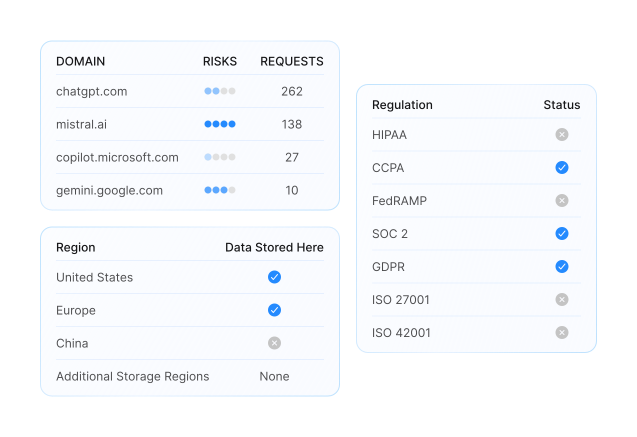

Continuous Vendor Oversight

Acuvity evaluates external AI services automatically, monitoring how they handle data, retention, and jurisdiction. High-risk providers are flagged in real time so their use can be restricted before exposure occurs.

Defensible Audit for AI

Acuvity generates audit-ready records of AI activity automatically, capturing inputs, outputs, and enforcement actions. These logs provide defensible evidence during regulatory reviews, audits, or legal proceedings.
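As a rough illustration of what an audit-ready record might contain, the sketch below logs an input, an output, and an enforcement action for each AI interaction. It is not Acuvity's implementation or API: the `AuditRecord` fields, the `append_record` and `verify_chain` helpers, and the hash-chaining step (each record stores the SHA-256 hash of the previous one so later edits are detectable) are all assumptions made for the example.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class AuditRecord:
    """One logged AI interaction (hypothetical schema)."""
    timestamp: str
    user: str
    tool: str
    prompt: str      # what was entered
    response: str    # what was generated (empty if blocked)
    action: str      # enforcement outcome, e.g. "allowed" or "blocked"
    prev_hash: str   # hash of the preceding record


def record_hash(record: AuditRecord) -> str:
    """Return a SHA-256 hash over the record's canonical JSON form."""
    payload = json.dumps(asdict(record), sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(log, *, user, tool, prompt, response, action) -> AuditRecord:
    """Append a new record that links back to the last one in the log."""
    prev_hash = record_hash(log[-1]) if log else GENESIS_HASH
    record = AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        user=user,
        tool=tool,
        prompt=prompt,
        response=response,
        action=action,
        prev_hash=prev_hash,
    )
    log.append(record)
    return record


def verify_chain(log) -> bool:
    """Check that every record points at the hash of its predecessor."""
    expected = GENESIS_HASH
    for record in log:
        if record.prev_hash != expected:
            return False
        expected = record_hash(record)
    return True
```

In this sketch, editing any record other than the most recent one breaks the link stored in its successor, so `verify_chain` returns `False`. Protecting the final record as well would require storing the latest hash somewhere outside the log.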

AI Governance and Compliance FAQs

What does AI governance actually cover in an enterprise?

AI governance spans the policies, controls, and oversight applied to AI systems across their lifecycle. It includes knowing what AI tools are in use, who is responsible for their operation, how inputs and outputs are handled, how vendors manage data, and how activity is logged for audits. Governance is what allows an enterprise to demonstrate compliance with laws like GDPR or HIPAA, satisfy customer contracts, and withstand regulator or auditor scrutiny.

Why is AI governance different from traditional IT compliance?

Traditional IT compliance focuses on infrastructure and data systems that are relatively stable. AI introduces dynamic risk: models update, vendors change terms, and employees adopt tools quickly. Governance for AI must be adaptive, not static. It requires continuous monitoring of tools and vendors, automated enforcement of policies, and records that evolve as regulations change. Without that adaptability, organizations risk blind spots and outdated compliance controls.

How does Acuvity support regulatory alignment (GDPR, HIPAA, PCI, EU AI Act, NIST AI RMF)?

Acuvity maps AI activity to existing regulatory frameworks by monitoring where sensitive data is used, how third-party vendors handle retention and jurisdiction, and whether usage aligns with organizational policies. It enforces controls in real time, such as blocking PII from leaving the enterprise or restricting high-risk vendors. It also produces audit-ready records that can be presented as evidence of compliance to regulators or auditors.
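The two real-time controls mentioned above (blocking PII and restricting high-risk vendors) can be pictured with a minimal sketch. Everything here is an assumption for illustration, not Acuvity's detection logic: the `evaluate` function, the `HIGH_RISK_VENDORS` set and its placeholder vendor name, and the two regular expressions, which stand in for real PII detection and would miss many cases in practice.

```python
import re
from dataclasses import dataclass

# Simplified stand-ins for PII detection (assumptions, not production rules).
PII_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical list of vendors flagged by a risk review.
HIGH_RISK_VENDORS = {"example-unvetted-llm"}


@dataclass
class Decision:
    allowed: bool
    reason: str


def evaluate(vendor: str, prompt: str) -> Decision:
    """Decide whether a prompt may be sent to an external AI vendor."""
    # Rule 1: restrict vendors that have been flagged as high-risk.
    if vendor in HIGH_RISK_VENDORS:
        return Decision(False, f"vendor '{vendor}' is flagged high-risk")

    # Rule 2: block prompts that appear to contain PII.
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(prompt):
            return Decision(False, f"prompt appears to contain {label}")

    return Decision(True, "no rule matched")
```

A decision like this could then be written to the audit log alongside the prompt, so the record shows both what was attempted and which control was applied.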

What makes a Defensible Audit important for AI governance?

Regulators, auditors, and customers don’t just want policies written down; they want evidence that policies were enforced. A Defensible Audit provides that evidence. With Acuvity, every input, output, and enforcement action is logged automatically.

If challenged, the organization can show exactly what data was entered, what result was generated, and what controls were applied. This ability to reconstruct AI use makes compliance programs credible and legally defensible.

How quickly can an enterprise establish AI governance with Acuvity?

Acuvity begins producing results almost immediately. Discovery shows which AI systems are in use across teams and vendors. Risk scoring highlights the most urgent compliance gaps. Policy enforcement applies controls automatically, so unsafe behaviors are stopped in real time. Audit logs are generated as soon as the system is in place, giving the enterprise defensible evidence from day one. Over time, policies and oversight adapt automatically as new regulations, vendors, and use cases emerge.