“If you cannot measure it, you cannot manage it.” – Peter Drucker

If an AI security threat isn’t visible, does that mean it’s nothing to worry about? Consider a riptide lurking silently below the surface of seemingly calm waters, capable of pulling even the most experienced swimmers under.

The truth is, what you don’t see can, and will, hurt you. Just like that riptide, hidden vulnerabilities in AI systems and unintentional misuse of these tools can cause serious damage to your organization if left unchecked. You can’t defend against what you don’t detect, so staying blind is not an option. When something finally goes wrong, the impact will resound through the organization, regardless of whether you saw it coming.

But what if you could see AI risks as they emerge and intervene before your organization is harmed? What if you could reap all of the benefits of AI tools without fear of exposing proprietary secrets, sensitive information, or confidential data?

This is why we created Acuvity.

In a world where security threats are constantly evolving, traditional methods of protection often fall short because they focus only on obvious, surface-level risks, leaving businesses vulnerable to the threats that lurk in the shadows. As organizations race to adopt Generative AI (Gen AI) tools, they may be unwittingly sacrificing security for convenience. Employees are constantly exploring new use cases for these tools in their everyday workflows, and when they find something that works, they’re likely to repeat it.

Imagine this: A financial advisor doing due diligence on a new account uploads a batch of documents into ChatGPT and asks, “What’s the average balance for this user over the last 6 months?” The tool generates an answer, but a bigger problem is at hand. Those documents contained the account holder’s PII and sensitive financial data. That information has now bypassed the organization’s data loss prevention (DLP) controls and left the company’s hands, where it may be retained by the provider or even used to train future models. OpenAI says the tool does not store sensitive data, but that assurance may not be entirely accurate.

Or take the case of an engineer working remotely who records a Zoom call where confidential project details are discussed and whiteboards are shared. They ask an AI tool to take notes, and every confidential piece of information from the call, including what was written on the whiteboards, is now in the hands of the LLM behind the tool. This scenario is not uncommon in the age of remote and hybrid work, but it presents a significant risk for companies handling valuable intellectual property or PII/PHI.

These tasks seem harmless on the surface. So harmless, in fact, that they’re likely exactly what you had in mind when you chose to adopt productivity-boosting Gen AI tools in the first place. Your people aren’t acting maliciously; they’re just trying to do their jobs more efficiently. The problem is that the most dangerous AI risks are often the ones that aren’t immediately apparent: the ones hidden in plain sight. Maximum visibility into which employees are using which tools, and for what purpose, gives security leaders the full picture. It lets them connect the dots between disparate pieces of information that might seem irrelevant on their own, or worse, that they didn’t even know existed.

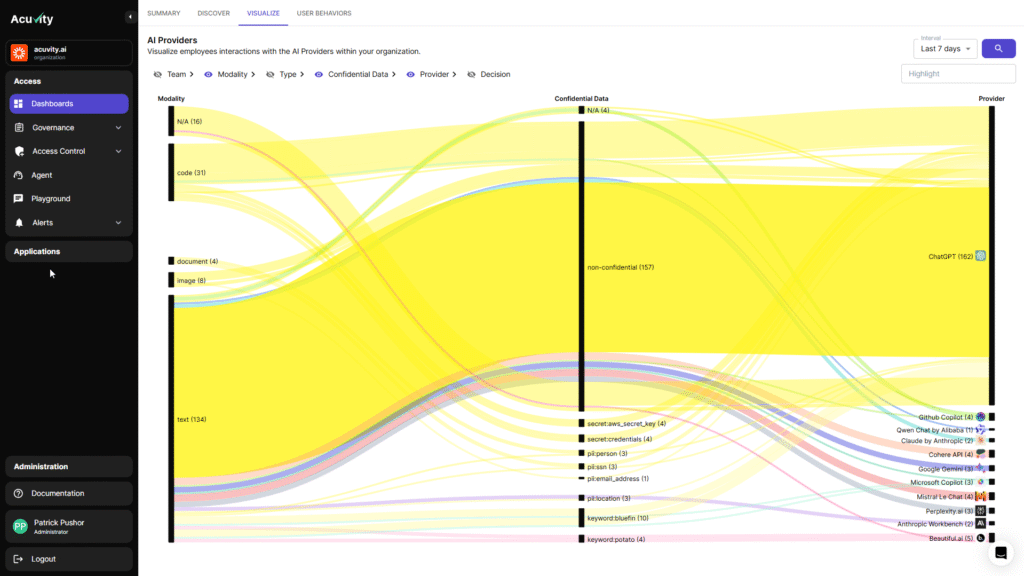

Fortify Your AI Security with Acuvity Visualization

Acuvity isn’t the same old SaaS security platform you’ve been pitched a million times. Our mission is simple: empower security leaders with the ability to see what others can’t, ensuring that they can protect what truly matters. Visualization is a cornerstone of our approach, and to us, it’s far more than just a buzzword.

Our platform gives you a bird’s eye view of your organization’s threat landscape and of how AI is used across your business. In one view, you can monitor all Gen AI applications, plugins, and services consumed in your enterprise, including shadow AI exposure, and track which employees and teams are using risky services or plugins. Unsure what’s risky and what isn’t? We help with that, too: Acuvity provides a reputation score for each Gen AI app or service in use.

What sets Acuvity apart is our focus on content. We understand that the most sensitive data isn’t always found where you expect it to be. By diving deeper into the content itself, we make it possible to automatically detect leaks of confidential data and block or redact the offending Gen AI exchanges. This enables organizations to protect their most valuable assets, whether those are confidential business plans, proprietary technology designs, or sensitive client information. With Acuvity, you can drill into specific conversations, or into usage from personal versus enterprise accounts.

Armed with this information, business leaders can build an AI governance policy tailored to their organization’s actual threat landscape and the habits of their people, rather than a blanket policy riddled with potential gaps.

We designed Acuvity to help two specific groups: security leaders and SecDevOps teams. CIOs and CISOs get an AI governance platform with the instant enforcement tools they need to safely enable Gen AI. SecDevOps gets pluggable AI security, so dev teams can build their own LLMs and apps faster without adding risk. As a result, enterprises can not only consume AI and LLMs safely, but also build new AI and LLM tools safely.

Traditional security methods are often reactive, aimed at responding to known threats or predefined scenarios. But if you wait, you’re too late. Acuvity doesn’t just alert you to the AI risks you already know about; we help you discover the ones you didn’t even know existed, including those coming from sources you may be overlooking. We’re not just providing another layer of protection; we’re providing a new way of thinking about AI security.