How Acuvity Manages Gen AI Risk

Gen AI adoption in enterprises has surged, driven by productivity and efficiency gains, reduced costs, improved decision making, and enhanced customer experience, among other benefits. In 2023, about 35% of organizations reported using Gen AI; by early 2025, that figure had doubled to 70%, based on several surveys. Sectors leading in adoption include tech, fintech, professional services, healthcare and banking, while manufacturing and government are slowly catching up. Although adoption is high, only a very small fraction of firms have officially rolled out Gen AI organization-wide. Most deployments are still in pilot or limited rollout phases. Alongside the talent and skills gap, one of the primary reasons is security and governance concerns.

Challenges with Risk and Governance for Generative AI

Several compliance frameworks, such as the EU AI Act, the National AI Commission Act in the US, and sector-specific mandates (e.g., PCI-DSS, HIPAA), require strict data handling, transparency, and bias mitigation. Models trained on unvetted datasets scraped from the internet risk exposing sensitive information or amplifying biases, which can easily lead to compliance violations. Enterprises know that their employees use Generative AI for several business functions, but they have very limited visibility into which Gen AI providers are used, what kind of data is sent to those providers, and how the output is used inside or outside the enterprise. Uncontrolled Shadow IT is one of the biggest challenges: employees use unauthorized Gen AI chatbots and tools, bypassing security reviews and creating unmonitored attack surfaces. The lack of safe Gen AI usage guidelines has left security and compliance teams feeling under-prepared to manage Gen AI risks, with many lacking clear guidance on acceptable use, auditing, or output validation.

Different aspects of adaptive risk in Gen AI and how Acuvity addresses them

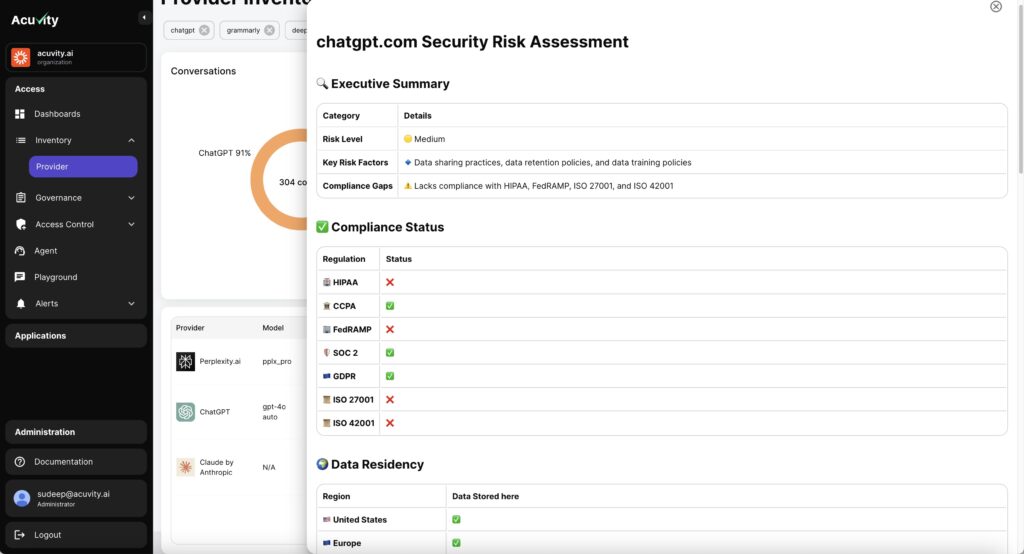

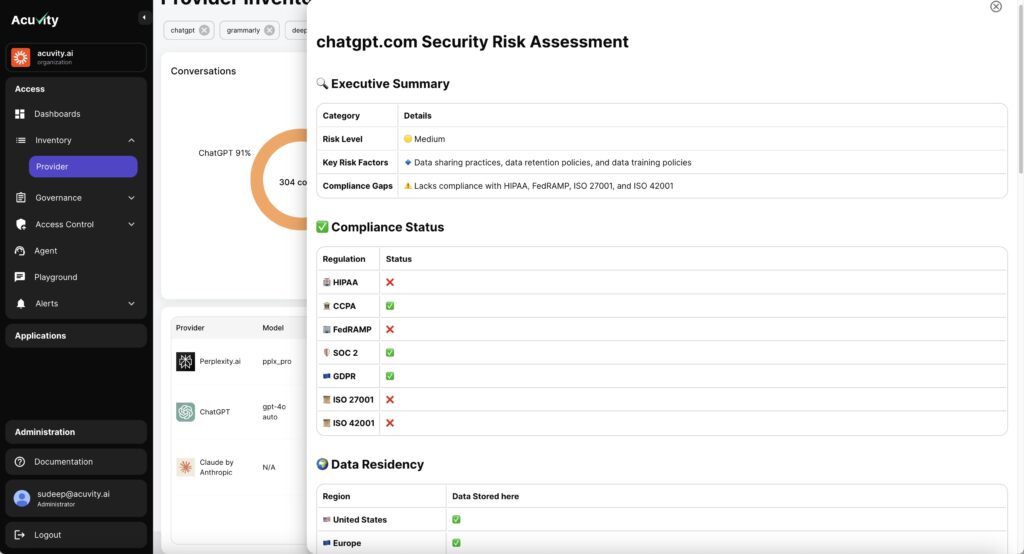

Gen AI Provider Centric Risks

Gen AI providers gain access to user and enterprise data as it makes its way into LLMs whenever users access chatbots, use AI services, or run agents. The key risks are that a provider shares users' data with third parties, does not obtain user consent, or uses the data for model training, after which it can surface for other users. Data retention and data residency are other important aspects, as we saw with DeepSeek, where data was being stored in data centers in China. The compliance certifications a Gen AI provider has obtained also matter: if the enterprise is HIPAA or SOC2 compliant but the Gen AI provider it uses is not, there are additional ramifications. Acuvity has built an adaptive risk engine that gives you a clear understanding of the risks involved with Gen AI providers such as chatbots, co-pilots, tools, plugins and other commonly used Gen AI services.
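To make the provider-side checks concrete, here is a minimal Python sketch of comparing a provider's posture against an enterprise's requirements. It is an illustration only, not Acuvity's implementation: the `ProviderProfile` fields, the `provider_findings` function, and the example provider are all hypothetical names chosen for this sketch.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderProfile:
    """Hypothetical record of what is known about a Gen AI provider."""
    name: str
    certifications: frozenset[str]   # e.g. {"SOC2", "HIPAA"}
    trains_on_user_data: bool        # does the provider train models on customer data?
    data_regions: frozenset[str]     # where customer data is stored


def provider_findings(
    provider: ProviderProfile,
    required_certs: set[str],
    allowed_regions: set[str],
) -> list[str]:
    """Return human-readable gaps between the provider and enterprise policy."""
    findings = []
    missing = sorted(required_certs - provider.certifications)
    if missing:
        findings.append(f"missing certifications: {', '.join(missing)}")
    if provider.trains_on_user_data:
        findings.append("uses customer data for model training")
    outside = sorted(provider.data_regions - allowed_regions)
    if outside:
        findings.append(f"stores data outside allowed regions: {', '.join(outside)}")
    return findings


# Illustrative provider; the values are made up for the example.
example = ProviderProfile(
    name="example-chatbot",
    certifications=frozenset({"SOC2"}),
    trains_on_user_data=True,
    data_regions=frozenset({"US", "CN"}),
)
for finding in provider_findings(example, {"HIPAA", "SOC2"}, {"US", "EU"}):
    print(finding)
```

A real assessment would draw these attributes from provider terms of service, audit reports, and observed traffic rather than a hand-written record.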

Continuous Risk Assessment

New Gen AI providers spring up all the time to cater to different use cases, as we saw with DeepSeek taking center stage in January 2025. Thousands of others, each with different underlying risks, are already in use at enterprises. Acuvity has built an adaptive risk engine that continuously evaluates user behavior, sensitive data inputs, device signals (e.g., geolocation, device type, browser plugins) and malicious input prompts. By monitoring the Gen AI providers, co-pilots, domains, services and agents that users in an enterprise are accessing, and by providing real-time updates on the risks associated with those providers, Acuvity can address all aspects of Shadow AI. The adaptive risk engine also considers user identity, session history, and regulatory requirements (e.g., GDPR, HIPAA) to adjust permissions and actions on a continuous basis.
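One way to picture how such signals could be combined is a simple additive score that maps to an action. The sketch below is an assumption-laden illustration, not a description of Acuvity's engine: the `RequestSignals` fields, the weights, and the thresholds are all invented for the example.

```python
from dataclasses import dataclass


@dataclass
class RequestSignals:
    """Hypothetical signals gathered for a single Gen AI request."""
    provider_risk: int             # 0 (low) to 100 (high), from a provider assessment
    contains_sensitive_data: bool  # prompt matched a sensitive-data detector
    unmanaged_device: bool         # request came from a device outside IT control
    regulated_user: bool           # user works under GDPR/HIPAA-style obligations


def risk_score(signals: RequestSignals) -> int:
    """Combine signals into a 0-100 score using illustrative weights."""
    score = signals.provider_risk // 2      # provider contributes up to 50 points
    if signals.contains_sensitive_data:
        score += 30
    if signals.unmanaged_device:
        score += 10
    if signals.regulated_user:
        score += 10
    return min(score, 100)


def decide(signals: RequestSignals) -> str:
    """Map the score to an action; thresholds are assumptions for this sketch."""
    score = risk_score(signals)
    if score >= 70:
        return "block"
    if score >= 40:
        return "redact"
    return "allow"


print(decide(RequestSignals(80, True, False, False)))   # high-risk provider + sensitive data
print(decide(RequestSignals(20, False, False, False)))  # low-risk provider, clean prompt
```

Because the score is recomputed per request, the same user can be allowed on one provider and redacted or blocked on another as the inputs change, which is the sense in which the evaluation is continuous.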

User and Agent Centric Risks

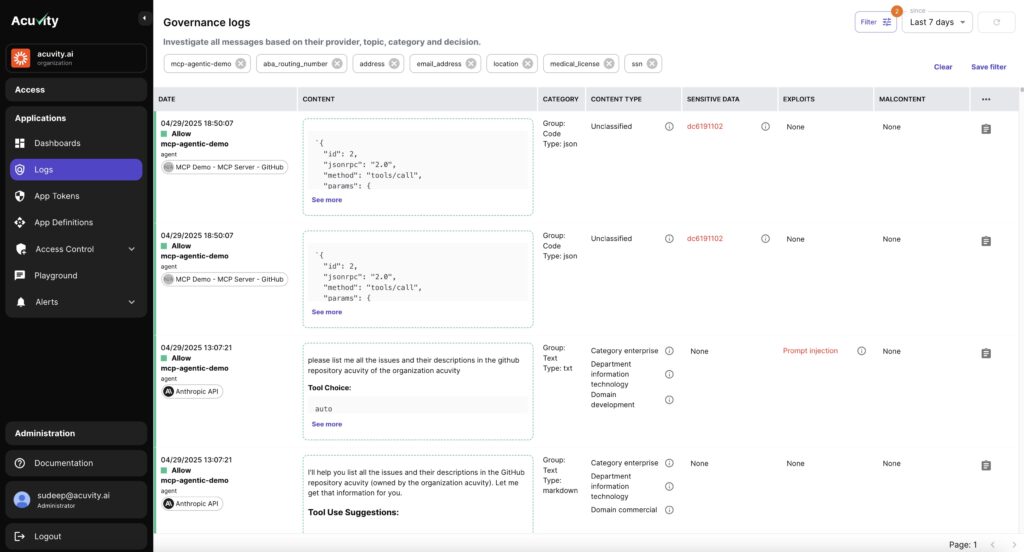

Users may knowingly or unknowingly share sensitive data (e.g., PII, trade secrets) in prompts, which could be stored, leaked, or misused by third parties (the Samsung incident is a prime example). Acuvity tracks all input and output prompts to detect regulated data (e.g., healthcare records, credit card details, employee records, sales forecasts) flowing into public Gen AI tools, which may breach GDPR, HIPAA, or CCPA and make the enterprise liable for those violations. It watches both users and autonomous agents, which may execute tasks beyond their intended scope, such as unauthorized data access or financial transactions, because of misaligned objectives. Overreliance on and misuse of Gen AI systems can result in blind trust in AI-generated content (e.g., code, data analysis, reports) that propagates errors, hallucinations, or biased recommendations into an enterprise's corporate assets. These are very difficult to track and unwind later because data lineage is tough to implement. Acuvity has the means to detect these risks and protect enterprises from them, allowing for safe usage of Gen AI.
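As a rough illustration of prompt inspection, the following Python sketch scans a prompt for a few kinds of sensitive data and redacts them before the prompt leaves the enterprise. It is a simplified example under stated assumptions, not Acuvity's detection logic: the patterns, the `scan_prompt` and `redact` helpers, and the sample prompt are hypothetical, and production detectors cover far more data types with fewer false positives.

```python
import re

# Illustrative patterns only; real detectors are considerably more thorough.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")     # 13-19 digits, optional separators
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")         # US SSN written as 123-45-6789
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used to discard digit runs that are not card numbers."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:          # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan_prompt(text: str) -> dict[str, list[str]]:
    """Return the sensitive values found in the prompt, grouped by type."""
    found = {
        "card": [m.group() for m in CARD_RE.finditer(text) if luhn_valid(m.group())],
        "ssn": SSN_RE.findall(text),
        "email": EMAIL_RE.findall(text),
    }
    return {kind: values for kind, values in found.items() if values}


def redact(text: str) -> str:
    """Replace detected values with typed placeholders."""
    text = CARD_RE.sub(
        lambda m: "[REDACTED:card]" if luhn_valid(m.group()) else m.group(), text
    )
    text = SSN_RE.sub("[REDACTED:ssn]", text)
    return EMAIL_RE.sub("[REDACTED:email]", text)


prompt = "Refund card 4111 1111 1111 1111 for jane.doe@example.com, order 1234567890123."
print(scan_prompt(prompt))
print(redact(prompt))
```

The Luhn check is what keeps the 13-digit order number in the sample from being treated as a card number; the same scan can be applied to model outputs as well as inputs.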

Ethical and Regulatory Compliance

Acuvity detects and corrects biases in training data, model outputs and output prompts from chatbots, and monitors them on a continuous basis using the adaptive risk engine to prevent discriminatory outcomes, which can have serious implications for regulated enterprises. Similarly, the engine maintains transparent logs of AI decisions and risk assessments for accountability and regulatory audits.

Conclusion

New Gen AI chatbots, co-pilots and other services will continue to emerge at a rapid pace, and the responsibility lies with enterprises and security vendors to adopt Gen AI with appropriate security controls. I firmly believe we've only seen the tip of the iceberg. The pace at which new Gen AI providers hit the market will only accelerate.
