The Good for HumAnIty

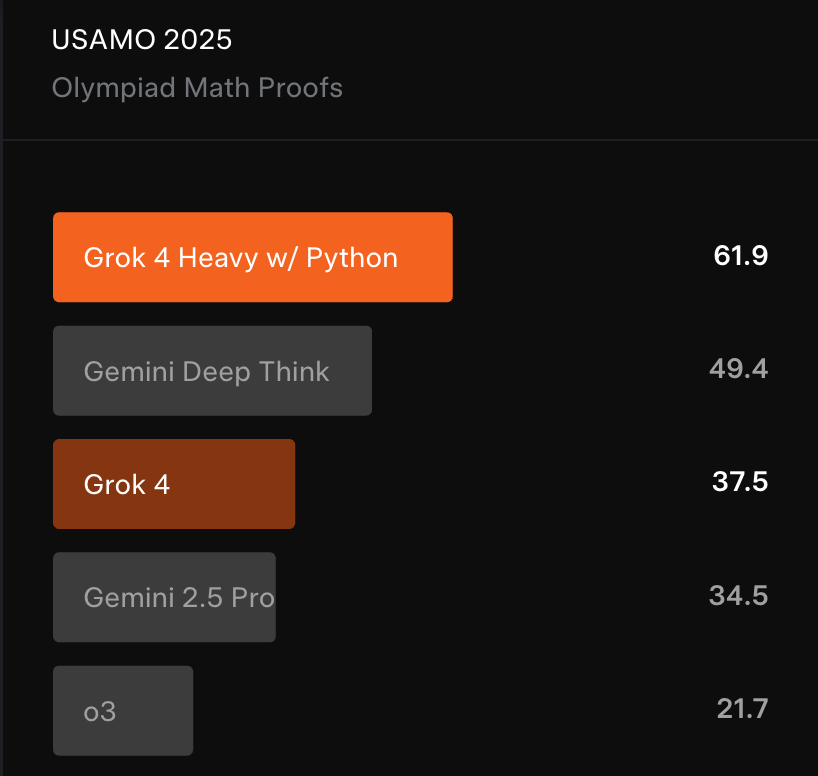

Grok 4 was released on July 9, 2025, with much fanfare—thanks to its impressive capabilities, especially in STEM, reasoning, and complex problem-solving. On challenging benchmarks like Humanity’s Last Exam and ARC-AGI2, Grok 4 set new records, demonstrating postgraduate-level intelligence. It can understand and generate text, code, and images, making it suitable for domains like healthcare, finance, research, and more.

Its multimodal design allows Grok 4 to tackle real-world reasoning and planning tasks that require integrating outputs from different modalities. These superior capabilities raise the possibility that Tesla’s robot may one day walk among us—with something close to flesh, bone, and pyramidal neurons in its cerebral cortex, powered by Grok 4.

The Bad (But Kinda Good for Hackers)

Without additional safety prompting, Grok 4 scored alarmingly low on independent security and safety benchmarks—just 0.3% for security and 0.42% for safety. In contrast, models like GPT-4o maintain much stronger baseline protections.

Security researcher Splx published a comprehensive assessment raising serious concerns. Perhaps most striking: a single-sentence user prompt can pull Grok 4 into disallowed territory—without resistance. This suggests minimal red-teaming and a rushed release with inadequate safeguards.

The Ugly

Grok 4 is vulnerable to a wide range of well-known jailbreak attacks—like Echo Chamber and Crescendo—that can lead to data leakage and compliance failures. These attacks work by subtly manipulating the model over multiple turns, coaxing it to accept harmful ideas as normal—essentially a form of AI brainwashing.

As outlined by NeuralTrust researchers, these threats are specific to Gen AI systems and well-documented. We won’t rehash their findings here, but suffice it to say: the gaps are significant.

On the content moderation front, the model was trained on vast, unmoderated datasets (notably from X), introducing bias and a high risk of generating toxic, offensive, or inappropriate content. Grok 4’s extremely low baseline security and lack of guardrails point to insufficient pre-deployment governance and testing.

So… What Now?

While hardened system prompts and runtime protections can mitigate some risks, Grok 4 clearly lacks a secure-by-default posture. In its current state, it’s not enterprise-ready without significant additional safeguards.

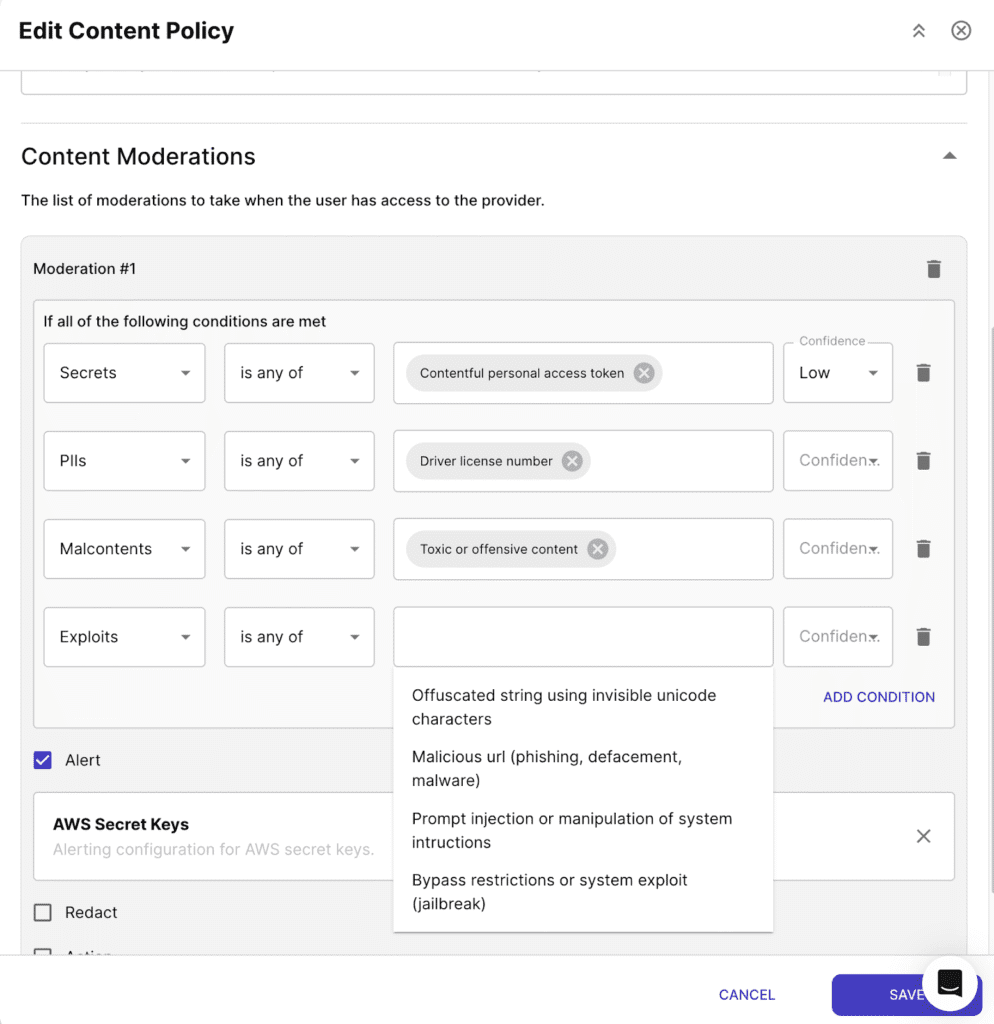

That matters—especially because the three major attack vectors Acuvity was founded to defend against are all present here:

- Runtime exploits

- Malicious or unsafe content (malcontent)

- Sensitive data exposure

If customers with high security requirements—like the Department of Defense—are to consider Grok 4, substantial hardening will be required. As a quick compensating control, exploit prevention techniques such as jailbreak detection, prompt injection filtering, privilege escalation controls, and output sanitization should be deployed on all traffic flows between users/applications and the Grok 4 provider.

For prompts that expose sensitive data, strong runtime controls to block or redact responses are urgently needed. Content moderation systems must also step in to detect and prevent toxic, biased, or harmful outputs—something Acuvity’s runtime security models are purpose-built to handle.

If your employees are experimenting with Grok 4, try Acuvity Ryno’s Gen AI Access Security module. And if your brave developers are building agents with it, we offer a free tier for securing agents built using less secure models.