The number of AI services continues to grow, with more and more tools available to employees every day. It’s becoming harder to keep up with all the new AI applications, much less understand which ones your employees can actually access or are actively using.

According to our latest State of AI Security report, 49% of respondents say they expect a security incident from Shadow AI in the next 12 months. Before you can enforce rules about AI usage, you need a way to see what’s actually happening in your organization.

This is where an AI risk assessment becomes critical.

Why Enterprises Need an AI Risk Assessment

Traditional security tools weren’t designed for the way AI operates. Firewalls and cloud access security brokers (CASBs) can see traffic but can’t interpret which AI services employees are connecting to, what data they’re sharing, or how those services handle that information.

AI introduces dynamic and often opaque risks, from model training with enterprise data to unverified data processors scattered across jurisdictions.

An AI Risk Assessment gives security and governance teams the foundational visibility needed to measure and manage these risks before they turn into compliance violations or data exposure events.

What is the Acuvity AI Risk Assessment?

Acuvity’s AI Risk Assessment provides organizations with a comprehensive way to understand their AI exposure. Our assessment evaluates your AI ecosystem and delivers actionable intelligence about AI usage and associated risks.

What the AI Risk Assessment Delivers

At the core of Acuvity’s approach is our agentic system for researching and extracting AI service information. This system continuously analyzes AI services (or AI domains) to gather critical data across multiple risk areas.

Service Offerings

Most Gen AI services offer multiple subscription tiers. ChatGPT, for example, provides Free, Plus, Pro, Business, and Enterprise plans. These tiers have significant implications for organizational risk, as they directly determine:

- Data handling practices

- Training data policies

- Access controls and governance features

- Compliance certifications

- Data residency options

Understanding which tier your employees are using is essential for an accurate AI risk assessment.

Data Policies

Acuvity’s risk analysis examines four critical data policy areas:

- Data Training: Customer data being used for training AI models introduces multiple overlapping risks spanning privacy, legal, security, ethical, and reputational domains. Organizations need to know whether their data may be incorporated into model training.

- Data Retention: How long Gen AI providers retain data creates risks for privacy, compliance, security, and trust. Many providers retain both training data and user interaction logs far longer than users realize, creating extended windows of exposure.

- Data Sharing: When end-user data is shared internally among provider teams or externally with third-party services and AI-as-a-service platforms, it creates new attack surfaces. Data may be exposed if entities receiving it suffer security incidents, are compromised by adversaries, or retain data longer than necessary.

- Data Residency: As companies use AI models processing data from multiple countries, uncertainties about where data is stored, processed, or transmitted create major regulatory, operational, and reputational risks. Cross-border data flows can trigger compliance obligations that organizations aren’t prepared to meet.

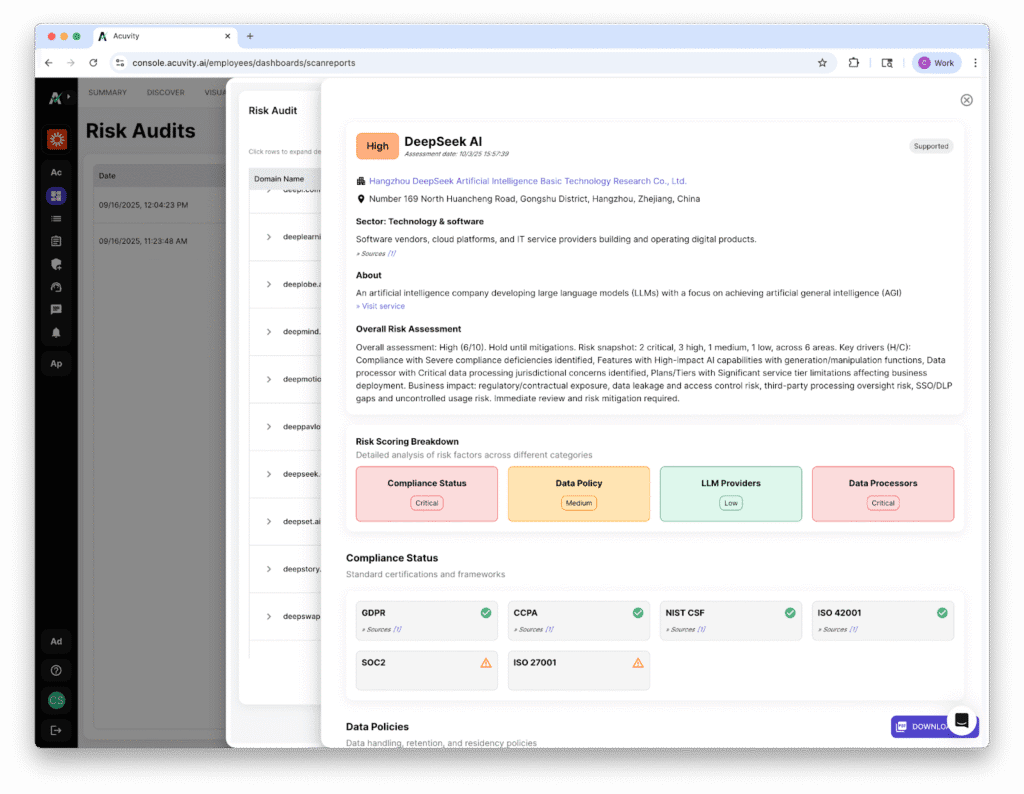
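One way to compare the four policy areas across services and subscription tiers is to record them per tier and flag any that conflict with internal rules. The Python sketch below is illustrative only. The `DataPolicy` record, the `policy_flags` function, the 30-day retention threshold, and the service `example-ai.com` are hypothetical assumptions. They are not Acuvity’s schema or any provider’s real terms.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DataPolicy:
    """Hypothetical record of one service tier's data policies."""
    service: str
    tier: str
    trains_on_customer_data: bool
    retention_days: Optional[int]  # None means the retention period is not disclosed
    shares_with_third_parties: bool
    residency_regions: frozenset[str]  # regions where data may be stored or processed


def policy_flags(policy: DataPolicy, allowed_regions: set[str],
                 max_retention_days: int = 30) -> list[str]:
    """Return the policy areas that conflict with the organization's (assumed) rules."""
    flags = []
    if policy.trains_on_customer_data:
        flags.append("training")
    if policy.retention_days is None or policy.retention_days > max_retention_days:
        flags.append("retention")
    if policy.shares_with_third_parties:
        flags.append("sharing")
    # Undisclosed residency, or any region outside the allowed set, is flagged
    if not policy.residency_regions or not policy.residency_regions <= allowed_regions:
        flags.append("residency")
    return flags


# Two tiers of the same hypothetical service, with made-up policy values
free_tier = DataPolicy("example-ai.com", "Free", True, None, True, frozenset({"US"}))
enterprise_tier = DataPolicy("example-ai.com", "Enterprise", False, 30, False, frozenset({"EU"}))

print(policy_flags(free_tier, allowed_regions={"EU"}))        # ['training', 'retention', 'sharing', 'residency']
print(policy_flags(enterprise_tier, allowed_regions={"EU"}))  # []
```

The sketch keeps one record per tier rather than per service. This follows the earlier point that the tier an employee uses determines which data policies apply.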

Compliance Certifications

Gen AI providers should hold the compliance certifications and attestations relevant to the industries they serve. Critical examples include:

- HIPAA compliance, backed by a Business Associate Agreement, for healthcare, life sciences, and medical technology

- PCI DSS for banking, payments, fintech, and e-commerce

- SOC 2 attestation for security-conscious enterprises

- ISO 27001 for information security management

- GDPR compliance for European data protection

If an enterprise user inadvertently provides information subject to specific compliance requirements to a provider lacking those certifications, the organization faces major compliance exposure and potential regulatory action.
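The exposure described above is essentially a set difference: the frameworks a data category requires, minus the frameworks the provider holds. The sketch below assumes a hypothetical, deliberately simplified mapping (`REQUIRED_FRAMEWORKS`) from data categories to frameworks. A real mapping depends on your industry, your contracts, and legal review.

```python
# Hypothetical, simplified mapping from data categories to the frameworks they require
REQUIRED_FRAMEWORKS: dict[str, set[str]] = {
    "phi": {"HIPAA"},            # protected health information
    "cardholder": {"PCI DSS"},   # payment card data
    "eu_personal": {"GDPR"},     # personal data subject to GDPR
}


def compliance_gaps(data_categories, provider_frameworks):
    """Map each data category to the required frameworks the provider lacks."""
    held = set(provider_frameworks)
    gaps = {}
    for category in data_categories:
        missing = REQUIRED_FRAMEWORKS.get(category, set()) - held
        if missing:
            gaps[category] = sorted(missing)
    return gaps


print(compliance_gaps(["phi", "cardholder"], {"SOC 2", "ISO 27001", "PCI DSS"}))
# {'phi': ['HIPAA']}
```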

Data Processors

The AI Risk Report identifies third-party entities or services that process personal data on behalf of the AI provider. These data processors are subject to strict legal, security, and contractual obligations.

Understanding the full chain of data processing is essential for:

- Assessing third-party risk exposure

- Ensuring contractual protections exist

- Meeting data protection authority requirements

- Conducting vendor risk assessments
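Processors can rely on their own subprocessors, so the full chain is a graph rather than a flat list. The minimal sketch below assumes you have already compiled a hypothetical adjacency map of which entity passes data to which. It walks that map breadth-first, records how many hops each processor sits from the provider, and visits each entity only once even when the map contains cycles.

```python
from collections import deque


def processing_chain(provider, passes_data_to):
    """Breadth-first walk from the provider through every downstream processor.

    passes_data_to: hypothetical adjacency map {entity: [entities it sends data to]}
    Returns {processor: hops from the provider}; each entity is visited once.
    """
    hops = {provider: 0}
    queue = deque([provider])
    while queue:
        current = queue.popleft()
        for nxt in passes_data_to.get(current, []):
            if nxt not in hops:
                hops[nxt] = hops[current] + 1
                queue.append(nxt)
    del hops[provider]  # report only the third parties
    return hops


# Made-up chain: a subprocessor two hops away is easy to miss in a flat list
graph = {
    "example-ai.com": ["cloud-host", "analytics-vendor"],
    "analytics-vendor": ["cloud-host"],
    "cloud-host": ["backup-provider"],
}
print(processing_chain("example-ai.com", graph))
# {'cloud-host': 1, 'analytics-vendor': 1, 'backup-provider': 2}
```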

Risk Scoring Engine

Once all data is gathered and verified, Acuvity’s proprietary risk engine analyzes the information to determine potential risks associated with each domain. The engine considers multiple factors across compliance status, data policies, service tier capabilities, and data processor arrangements to generate a comprehensive risk score.
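Acuvity’s engine is proprietary, so the following is not its algorithm. It only illustrates the general idea of combining the four factor groups named above into a single score, in this case with a weighted sum. The weights, the 0.0 to 1.0 factor scale, and the 0 to 100 output range are all assumptions chosen for the example.

```python
# Hypothetical weights for the four factor groups; they sum to 1.0
WEIGHTS = {
    "compliance": 0.35,
    "data_policies": 0.30,
    "service_tier": 0.20,
    "data_processors": 0.15,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9


def risk_score(factor_risks):
    """Combine per-factor risks (0.0 = low, 1.0 = high) into a 0-100 score."""
    missing = WEIGHTS.keys() - factor_risks.keys()
    if missing:
        raise ValueError(f"missing factors: {sorted(missing)}")
    for name, value in factor_risks.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    total = sum(WEIGHTS[name] * factor_risks[name] for name in WEIGHTS)
    return round(100 * total)


print(risk_score({"compliance": 1.0, "data_policies": 0.5,
                  "service_tier": 0.0, "data_processors": 0.0}))  # 50
```

A weighted sum is easy to audit, because each factor’s contribution to the score is visible. Its weakness is that strengths in one area can mask a single severe gap. A production engine might therefore add overrides, such as a minimum score whenever a required certification is missing.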

These AI Risk Reports are available directly in the Acuvity AI Security Platform and can also be exported as PDF files for sharing with stakeholders. Organizations interested in obtaining sample PDF reports can request them here.

From Assessment to Continuous Assurance

The AI Risk Assessment serves as the critical first step in establishing comprehensive Gen AI security. By correlating firewall logs with AI service access and understanding associated risks, organizations gain the visibility needed to make informed decisions.

Once the risk landscape is well understood, organizations can deploy Acuvity’s full protection capabilities:

- Browser plugin deployment for inline protection and policy enforcement

- Acushield on Mac, Windows, and Linux for endpoint-level control

- Comprehensive governance logs tracking all Gen AI interactions

- Real-time threat detection identifying malicious or non-compliant usage

- Granular access controls enforcing organizational policies

This progression, from assessment to active protection, ensures organizations can move quickly from discovery to risk mitigation without extended deployment cycles.

How the AI Risk Assessment Works

The assessment is designed to be lightweight and non-intrusive. It can be deployed independently in a dedicated virtual machine or run directly from a laptop, making it accessible to organizations of any size.

Step 1: Environment Scanning

The assessment begins by pulling Acuvity’s public Docker image (available here) and running it with an Acuvity token. The container leverages Acuvity’s continuously updated AI Domain Knowledge Base to obtain a comprehensive list of AI service domains, including those that may pose potential risks to your organization.

The system performs multi-layer TCP/IP stack tests to assess whether each AI domain is reachable from your environment. During this process, it gathers critical metadata including:

- Whether bot protection mechanisms were triggered

- SSL certificate validity
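The sketch below uses Python’s standard library to approximate two of the layers described above: a TCP connection to port 443, then a TLS handshake that verifies the certificate chain and hostname. It is an illustration built on assumptions, not the logic inside Acuvity’s container. The `probe_domain` function and `ProbeResult` record are hypothetical. The sketch does not attempt bot-protection detection, which would require inspecting HTTP responses.

```python
import socket
import ssl
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProbeResult:
    domain: str
    tcp_reachable: bool
    tls_valid: Optional[bool]  # None when no TLS verdict could be reached
    error: Optional[str] = None


def probe_domain(domain: str, port: int = 443, timeout: float = 3.0) -> ProbeResult:
    """Check TCP reachability, then a TLS handshake with certificate and hostname checks."""
    try:
        sock = socket.create_connection((domain, port), timeout=timeout)
    except OSError as exc:  # DNS failure, refused connection, timeout, ...
        return ProbeResult(domain, False, None, str(exc))
    context = ssl.create_default_context()  # verifies chain and hostname by default
    try:
        with context.wrap_socket(sock, server_hostname=domain):
            return ProbeResult(domain, True, True)
    except ssl.SSLCertVerificationError as exc:  # expired, self-signed, wrong host, ...
        return ProbeResult(domain, True, False, exc.verify_message)
    except (ssl.SSLError, OSError) as exc:  # handshake failed for another reason
        return ProbeResult(domain, True, None, str(exc))
    finally:
        sock.close()
```

The interesting case is a reachable domain whose `tls_valid` is `False`. The domain can be reached, but the certificate it presents is rejected by the default trust store. That can happen with an expired certificate or with a TLS-intercepting proxy whose CA the trust store does not include.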

Step 2: Log Correlation (Optional)

For deeper analysis, organizations can provide security logs, such as firewall logs, so the assessment can correlate Gen AI service access with individual users. This correlation reveals:

- Which users accessed specific Gen AI services

- When those accesses occurred

- Risks associated with each access event

This log analysis serves as a critical starting point for risk assessment, helping organizations understand not just what AI services are accessible, but which ones are actively being used and by whom.
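The sketch below shows what this correlation can look like. It matches each firewall log entry’s destination host against a list of AI domains, counts subdomains as matches, and prefers the most specific matching domain. The CSV layout (`timestamp,user,src_ip,dest_host`), the domains, and the function names are hypothetical. Real firewall exports vary by vendor and usually need a vendor-specific parser.

```python
import csv
import io
from datetime import datetime


def match_ai_domain(host, ai_domains):
    """Return the most specific AI domain that host equals or is a subdomain of."""
    host = host.lower().rstrip(".")
    matches = [d for d in ai_domains if host == d or host.endswith("." + d)]
    return max(matches, key=len) if matches else None


def correlate(log_text, ai_domains):
    """Return (user, ai_domain, timestamp) for each log row that hits a known AI domain."""
    events = []
    for row in csv.DictReader(io.StringIO(log_text)):
        domain = match_ai_domain(row["dest_host"], ai_domains)
        if domain:
            events.append((row["user"], domain, datetime.fromisoformat(row["timestamp"])))
    return events


# Hypothetical log excerpt and AI domain list
SAMPLE_LOG = """timestamp,user,src_ip,dest_host
2024-05-01T09:15:00,alice,10.0.1.23,chat.example-ai.com
2024-05-01T09:20:00,bob,10.0.2.40,intranet.corp.local
2024-05-01T10:02:00,bob,10.0.2.40,api.other-ai.net
"""
AI_DOMAINS = {"example-ai.com", "other-ai.net"}

for user, domain, ts in correlate(SAMPLE_LOG, AI_DOMAINS):
    print(user, domain, ts.isoformat())
# alice example-ai.com 2024-05-01T09:15:00
# bob other-ai.net 2024-05-01T10:02:00
```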

Step 3: Comprehensive Risk Analysis

Once all scans are completed, the AI Risk Assessment generates a complete report showcasing exposure to AI domains. The assessment can be configured for specific locations, user types, or network segments, providing targeted visibility where it’s needed most.

Each identified AI domain receives a Risk Score based on multiple factors, along with a detailed AI Risk Report that provides the intelligence needed to make informed decisions.
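Scoping the results to one network segment and ranking domains by risk can be sketched as follows. The event tuples, the CIDR segment, and the per-domain scores are hypothetical inputs. In practice, the scores would come from the risk analysis itself.

```python
import ipaddress
from collections import defaultdict


def domain_report(events, segment, risk_scores):
    """Summarize AI domain usage for one network segment, highest risk first.

    events: iterable of (user, src_ip, ai_domain) tuples (hypothetical shape)
    segment: CIDR string such as "10.0.1.0/24"
    risk_scores: hypothetical per-domain scores on a 0-100 scale
    Returns a list of (domain, score or None, distinct user count).
    """
    network = ipaddress.ip_network(segment)
    users = defaultdict(set)
    for user, src_ip, domain in events:
        if ipaddress.ip_address(src_ip) in network:
            users[domain].add(user)
    rows = [(domain, risk_scores.get(domain), len(who)) for domain, who in users.items()]
    # Unscored domains last, then descending score, then name for a stable order
    return sorted(rows, key=lambda row: (row[1] is None, -(row[1] or 0), row[0]))


events = [
    ("alice", "10.0.1.23", "example-ai.com"),
    ("carol", "10.0.1.50", "example-ai.com"),
    ("dave", "10.0.1.7", "other-ai.net"),
    ("bob", "10.0.2.40", "other-ai.net"),  # outside the segment, so ignored
]
print(domain_report(events, "10.0.1.0/24", {"example-ai.com": 40, "other-ai.net": 75}))
# [('other-ai.net', 75, 1), ('example-ai.com', 40, 2)]
```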

Getting Started With AI Risk Assessment

The AI Risk Assessment requires no complex installation or enterprise-wide deployment. It’s designed as a simple first step that anyone can run on a laptop or in a virtual machine.

Organizations interested in conducting an AI Risk Assessment can sign up here using GitHub, GitLab, Google, or Hugging Face authentication.

The Bottom Line

Any organization deploying Generative AI must carefully vet, contractually bind, and continuously monitor all risk components, including data sharing, residency, processing, and compliance obligations.

Failure to do so proactively can lead to:

- Regulatory fines and enforcement actions

- Loss of business reputation and customer trust

- Intellectual property theft and data breaches

- Competitive disadvantage from security incidents

This is especially difficult in verticals like healthcare, finance, and government, where regulatory requirements are stringent and enforcement is aggressive.

Acuvity’s AI Risk Assessment has been purpose-built to address this challenge head-on. It provides complete coverage for Shadow AI discovery, data loss prevention, IP loss mitigation, and regulatory exposure reduction, all through a lightweight, easy-to-deploy solution that delivers results in hours, not weeks.

Organizations serious about AI security start with comprehensive visibility. The AI Risk Assessment provides that foundation.