Gen AI adoption doubled to 65% between 2023 and 2024, and 75% of generative AI users are looking to automate tasks at work, according to recent studies by Salesforce and Amplifai. On the build-versus-buy question, companies are almost evenly split: 47% of solutions are developed in-house, while 53% are sourced from vendors, according to a recent study by Menlo Ventures. As a result, a large share of enterprises are building Gen AI applications in-house that align closely with their unique business needs, operational workflows, and strategic goals.

Enterprises have been building specialized applications and agents, such as custom chatbots, domain-specific language models, and proprietary analytics tools, to gain a competitive edge and iterate quickly without depending on external vendors’ timelines.

Security and Safety Concerns

As with most transformative new technologies, one of the key barriers to adoption is security. The barrier is higher here because enterprises building Gen AI apps in-house typically lack the security expertise of Gen AI providers such as OpenAI or Anthropic. The primary concerns of most application development and security teams center on:

- Unauthorized Access and Role Management – Many Gen AI systems lack robust role-based access control (RBAC), making it difficult to restrict who can access sensitive data or interact with AI systems.

- Sensitive Data Exposure – Gen AI applications and models often require access to vast amounts of data for training and inference. If sensitive or proprietary information is input into these systems, it may inadvertently be stored or reused, leading to privacy violations or data leaks.

- Intellectual Property (IP) Risks – Proprietary algorithms, models, or outputs generated by Gen AI systems may be stolen or reverse-engineered by competitors or attackers.

- Malcontent Generation – Gen AI systems may produce biased, harmful, or factually incorrect outputs that could damage an organization’s reputation or create legal liabilities.

- Runtime Exploits – Malicious actors can manipulate Gen AI systems by crafting inputs that cause the model to generate harmful or unintended outputs.
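To make the first concern concrete, here is a minimal sketch of a role check placed in front of a model call. The role names, the permission table, and the `guarded_call` helper are all hypothetical and exist only for illustration; a real deployment would pull roles from an identity provider or policy store.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (an assumption for this sketch).
ROLE_PERMISSIONS = {
    "analyst": {"chat"},
    "engineer": {"chat", "code_assist"},
    "admin": {"chat", "code_assist", "fine_tune"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str


def is_allowed(user: User, action: str) -> bool:
    """Return True if the user's role grants the requested action."""
    # Unknown roles get an empty permission set, so they are denied by default.
    return action in ROLE_PERMISSIONS.get(user.role, set())


def guarded_call(user: User, action: str, prompt: str) -> str:
    """Reject the request before it reaches any model if the role lacks access."""
    if not is_allowed(user, action):
        raise PermissionError(f"{user.name} ({user.role}) may not perform {action}")
    # Placeholder for the actual model call, which is out of scope here.
    return f"[{action}] accepted prompt of {len(prompt)} characters"
```

Denying unknown roles by default keeps a misconfigured or missing role from silently gaining access.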

Gen AI Agentic Application Security with Acuvity

We at Acuvity are excited to enhance our product capabilities to comprehensively protect the Gen AI-based applications that enterprises are building in-house, mitigating the security concerns that hinder rapid adoption of Gen AI.

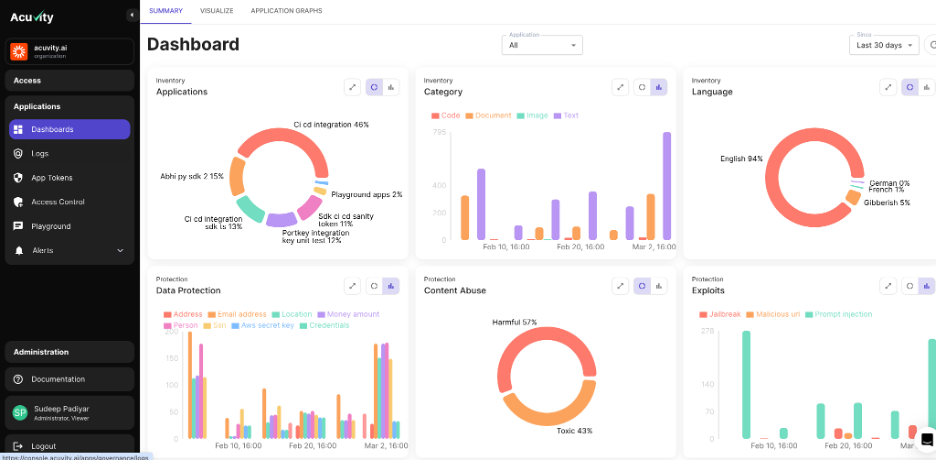

The Acuvity Application security module provides complete visibility into the use of Gen AI services by applications and agents developed in-house, and provides runtime protection against exploits (prompt injection, jailbreaks, malicious URLs, etc.), malcontent (toxic, biased, or harmful content), and sensitive data loss (PII, secrets). All aspects of securing Gen AI applications, such as model SBOMs, threat modeling, prompt fuzzing, and red teaming, are important. Runtime protection, however, can yield the greatest results for three reasons: attacks on the Gen AI attack surface are evolving quickly; the Gen AI providers that agents and apps interact with have disparate levels of security capability; and the range of malcontent and sensitive data that can be tested for in-house will always differ from what is seen in production. As we have seen with other attack vectors, a defensive shield around your applications at runtime gives enterprises the most confidence to deploy their apps in production safely.
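The idea of a runtime shield can be sketched in a few lines. The example below is not Acuvity's implementation or API; it is a simplified illustration that assumes regex-based redaction of email addresses and AWS-style access key IDs, plus a naive phrase match for prompt injection. The patterns, the phrase list, and the `shielded` wrapper are assumptions chosen for brevity, and production detectors are considerably more thorough.

```python
import re
from typing import Callable

# Illustrative patterns only (assumptions for this sketch).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
AWS_KEY_RE = re.compile(r"\bAKIA[0-9A-Z]{16}\b")
INJECTION_PHRASES = ("ignore previous instructions", "disregard the system prompt")


def redact(text: str) -> str:
    """Mask email addresses and AWS-style access key IDs."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return AWS_KEY_RE.sub("[SECRET]", text)


def looks_like_injection(text: str) -> bool:
    """Naive check: case-insensitive match against known injection phrases."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in INJECTION_PHRASES)


def shielded(model: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a model callable with checks on both the prompt and the response."""
    def wrapper(prompt: str) -> str:
        if looks_like_injection(prompt):
            return "Request blocked by runtime policy."
        # Redact before the prompt leaves the application, and again on the
        # way back in case the model echoes or produces sensitive data.
        response = model(redact(prompt))
        return redact(response)
    return wrapper
```

Wrapping the model callable, rather than editing each call site, means the same checks apply regardless of which provider the application talks to.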

Deployments

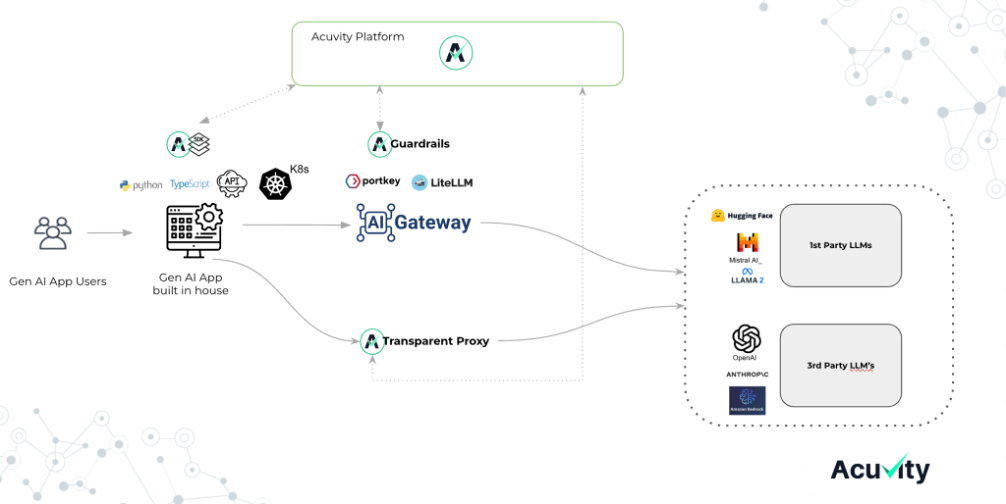

Applications leveraging Gen AI are typically deployed behind AI gateways, which provide a single access point to multiple large language models (LLMs) or foundation models (FMs), such as those from OpenAI and Anthropic or the LLaMA family. These applications tend to be developed in Python because of its simplicity, extensive libraries, and large community support.

Widely used frameworks such as TensorFlow and PyTorch for deep learning and Hugging Face Transformers for natural language processing (NLP) can be leveraged easily in Python apps. Because most of these applications make extensive use of GraphQL and REST APIs, embedding security via APIs provides an easy insertion path. For these reasons, the initial deployment options Acuvity will support are:

– Python/TypeScript SDK

– Gen AI gateways like Portkey and LiteLLM

– Scan APIs

– Transparent proxy
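
To illustrate why a gateway is a convenient insertion point, here is a minimal sketch of the "single access point" pattern. It does not reflect the API of any real gateway product; the `Gateway` class, the `provider/model-name` naming scheme, and the stub backends are assumptions made for this example.

```python
from typing import Callable, Dict

# A backend is any callable that takes a prompt and returns a completion.
Backend = Callable[[str], str]


class Gateway:
    """Single entry point that dispatches requests to registered providers."""

    def __init__(self) -> None:
        self._backends: Dict[str, Backend] = {}

    def register(self, provider: str, backend: Backend) -> None:
        """Associate a provider name with the callable that serves it."""
        self._backends[provider] = backend

    def complete(self, model: str, prompt: str) -> str:
        # Model names are assumed to look like "provider/model-name".
        provider, _, _ = model.partition("/")
        backend = self._backends.get(provider)
        if backend is None:
            raise KeyError(f"no backend registered for provider {provider!r}")
        return backend(prompt)


# Stub backends stand in for real provider clients.
gateway = Gateway()
gateway.register("openai", lambda prompt: f"openai stub: {prompt}")
gateway.register("anthropic", lambda prompt: f"anthropic stub: {prompt}")
```

Because every request passes through `complete`, a security check added there covers all providers at once, which is the property that makes gateways and proxies attractive places to embed runtime protection.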