Background

As generative AI becomes embedded across modern enterprise workflows, organizations are under pressure to address a fast-evolving risk landscape. From employees using ChatGPT to AI agents operating autonomously, the security perimeter has shifted and traditional data governance tools are not enough. Enterprises now face a critical decision: do they retrofit legacy solutions, or adopt purpose-built platforms designed for the new frontier?

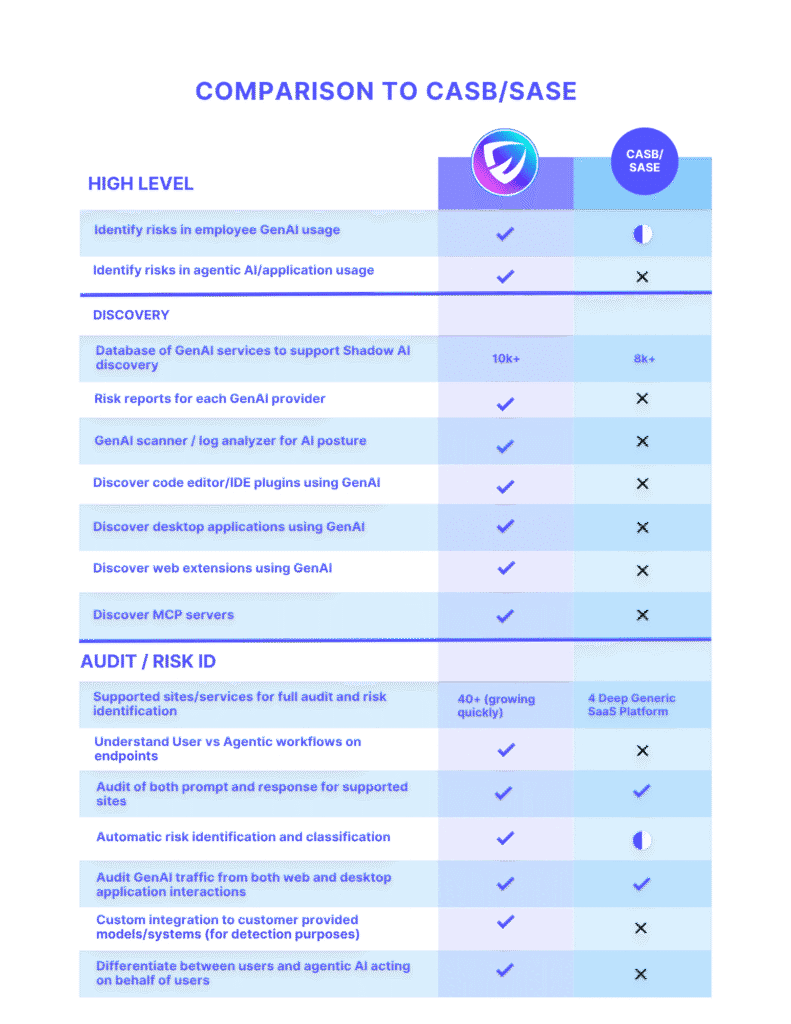

CASB and SASE platforms such as Zscaler, Netskope, Cloudflare WARP, and Cato, long positioned as gatekeepers for cloud access and network security, are beginning to introduce add-on features that touch on generative AI visibility. These updates – often framed as AI activity monitoring or policy enforcement – represent an incremental step toward addressing the new risks AI introduces. Yet, when placed in context, it’s clear that CASB/SASE offerings remain fundamentally network and access control solutions. They are not designed as comprehensive Gen AI security platforms; they are traditional security architectures with limited AI-related capabilities layered on top.

Why is Gen AI different?

Endpoint Security Evolution

AI agents (coding assistants, copilots, and workflow agents) are extending endpoint access beyond traditional human users. This creates critical attribution gaps: organizations cannot distinguish between human and AI actions on sensitive data. Closing these gaps requires:

- Identity management – distinguishing user actions from agent actions on enterprise data, which is essential for liability and compliance

- Forensic capabilities – comprehensive audit trails to determine accountability in security incidents

- Contextual controls – content and context-aware security measures

- Multi-modal integration – supporting diverse form factors and data connections

Without proper attribution, organizations face regulatory violations, security blind spots, and an inability to establish accountability when AI agents access or modify enterprise data. The shift demands attribution-focused security frameworks to manage shared endpoint responsibility.
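To make the attribution idea concrete, here is a minimal sketch of an audit record that keeps the human identity and the agent identity as separate fields. The `AuditEvent` type, its field names, and the `events_by_actor` helper are illustrative assumptions, not part of any product or standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AuditEvent:
    """One access to enterprise data (hypothetical schema for illustration)."""
    user_id: str              # the accountable human identity
    agent_id: Optional[str]   # None when the human acted directly
    resource: str             # what was read or modified
    action: str               # e.g. "read" or "write"
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )

    @property
    def actor_type(self) -> str:
        # An event is agent-driven whenever an agent identity is attached.
        return "agent" if self.agent_id else "human"


def events_by_actor(events):
    """Split events into human-driven and agent-driven buckets for forensics."""
    buckets = {"human": [], "agent": []}
    for event in events:
        buckets[event.actor_type].append(event)
    return buckets


events = [
    AuditEvent("alice", None, "crm/accounts", "read"),
    AuditEvent("alice", "code-assistant", "repo/secrets.env", "read"),
]
buckets = events_by_actor(events)
```

Because every agent event still carries a `user_id`, an investigator can answer both "which agent touched this file?" and "on whose behalf?" from the same record.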

Lots of Form Factors and Data Connectivity

The workforce is using Gen AI in many different ways:

- SaaS services such as ChatGPT, Claude, and other services used by individual employees. More than 10K such services exist.

- Code editor plugins such as Cline, GitHub Copilot, and 500+ other plugins

- Desktop applications such as ChatGPT, Claude Desktop, Cursor, and 500 or more similar apps

- AI-native web browser extensions

These tools connect to enterprise data through:

- MCP servers

- Enterprise data connectors

Enterprise users are using LLMs everywhere:

- LLMs in public clouds such as AWS, Azure, and GCP

- LLMs in third-party GPU clouds

- LLMs on model PaaS offerings such as Anyscale and Together.ai

- LLMs on endpoints, using components such as Ollama

Problems with Gen AI

Shadow AI Discovery: AI sprawl is massive across all form factors, and understanding which AI components are in use is key. Most security products, including CASB/SASE, do not provide this visibility.

They can report that the OpenAI API was used, but not whether a user called it directly or an agent called it on the user's behalf, whether that agent was a reputable binary or something downloaded from GitHub or another source, or whether it should have been trusted with the credentials.

Data Leakage: Users are uploading documents to Gen AI services such as DeepSeek, which can be risky for an enterprise. They are also using inappropriate tiers, such as the free tier of ChatGPT, which is again very risky. Finally, users are connecting enterprise data sources to well-known services, and often to less secure LLMs, without realizing what data they are leaking.

Understanding usage is critical: which LLM providers were used by agents deployed in a code editor plugin, desktop application, or web extension, which of those agents acted on behalf of the user, and what enterprise data they sent.

Auditability and Forensics: Understanding what happened, and attributing it to both the user identity and the agent identity, is key.

Malcontent: Prompt injections delivered through email or documents enable no-touch attacks that trick agents into exfiltrating data, and breaches of this kind have already been demonstrated.

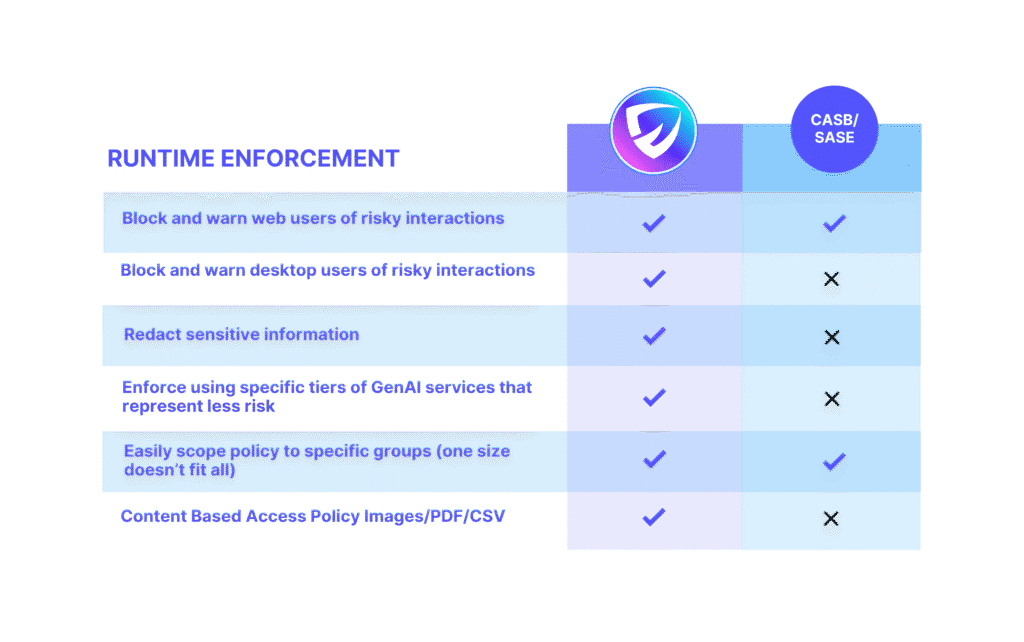

Control mechanisms need new kinds of detectors that understand multi-modal content. As more and more content can be shared with AI, these detectors are needed to apply content-based access control.

As more Gen AI services emerge, security teams need deeper understanding and control of the features, tools, and plugins those services provide, along with application signatures for controllable entities such as service tiers. Existing solutions do not have a deep understanding of these services, and they certainly lack controls for these aspects across a broad set of applications and services.

Vendor Strengths in CASB/SASE for AI

Leading CASB/SASE vendors such as Netskope, Zscaler, Palo Alto (Prisma Access), and Cisco Umbrella have started to extend their platforms with features relevant to generative AI use. Their strengths include:

- Visibility into traffic patterns – These vendors already sit in-line with cloud and web traffic, giving them the ability to detect and report on which Gen AI services (e.g., ChatGPT, Claude, Gemini) employees are accessing.

- Policy enforcement – Through URL filtering, app categorization, and data leakage prevention (DLP) extensions, they can enforce coarse-grained controls (e.g., block uploads to certain Gen AI sites or limit access to sanctioned tools).

- Integration with broader cloud security stacks – Their CASB/SASE modules often integrate with SWG, ZTNA, and DLP, giving organizations a unified control point for cloud and web security.

- Rapid policy rollout – Because they’re already embedded in the traffic path, vendors can quickly apply new rules or templates (e.g., “restrict ChatGPT to read-only”) with minimal operational overhead.

These capabilities make CASB/SASE vendors a natural first line of defense for organizations beginning to confront Gen AI-related risks.

Why CASB/SASE Falls Short for Gen AI Security

Despite these advantages, CASB and SASE platforms fall short in addressing the core risks of generative AI:

- Lack of deep AI context – CASB/SASE can recognize domains and traffic flows, but they do not understand prompts, responses, or model behaviors. This leaves them blind to sensitive information being entered into AI tools or risky outputs generated by those tools.

- Limited to binary controls – Policies are typically allow/deny/block, not nuanced. They cannot distinguish between safe and unsafe uses of the same AI platform (e.g., querying product documentation vs. pasting regulated customer data).

- Reactive rather than adaptive – Rules are generally domain- or signature-based, meaning they only catch what’s already known (e.g., chatgpt.com). They are not equipped to handle new AI apps, APIs, or embedded AI assistants within SaaS tools.

- No model-level risk management – CASB/SASE solutions were built for network and SaaS access control, not for monitoring or securing AI model usage. They lack mechanisms for prompt inspection, fine-grained data redaction, model usage auditing, or bias/hallucination risk mitigation.

- Not designed for Gen AI-native architectures – As enterprises adopt AI copilots, agent frameworks, and LLM-powered workflows within SaaS and internal apps, CASB/SASE visibility and control break down, since these activities often bypass traditional network chokepoints.

In short, CASB/SASE vendors provide broad but shallow coverage of Gen AI risk. They help organizations answer “Which AI apps are being used?” and “Should I block or allow them?”, but they cannot address how AI is being used or what risks that use introduces.
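The gap between "which app" and "how it is used" can be illustrated with a small sketch. It contrasts a destination-only decision with one that also inspects the prompt. The blocklist entry, the example URLs, and the SSN-like pattern are hypothetical, and a regex stands in for what would in practice be a much richer content detector.

```python
import re
from urllib.parse import urlparse

# Hypothetical blocklist entry, for illustration only.
BLOCKED_DOMAINS = {"chat.example-ai.com"}

# Hypothetical stand-in for a regulated-data detector: a US SSN-like number.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def domain_decision(url: str) -> str:
    """Network-style control: the verdict depends only on the destination."""
    host = urlparse(url).hostname or ""
    return "block" if host in BLOCKED_DOMAINS else "allow"


def content_decision(url: str, prompt: str) -> str:
    """Content-aware control: same destination, verdict depends on the prompt."""
    if domain_decision(url) == "block":
        return "block"
    return "block" if SSN_PATTERN.search(prompt) else "allow"


sanctioned = "https://assistant.example.com/v1/chat"
safe = content_decision(sanctioned, "Summarize our public product docs")
risky = content_decision(sanctioned, "Customer SSN is 123-45-6789")
```

Here `domain_decision` returns the same verdict for both prompts because the destination is identical, while `content_decision` separates the documentation query from the one carrying regulated data.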

Acuvity: Purpose-Built for Gen AI Security

Acuvity was built from day one to address the unique challenges of securing generative AI, across both human-driven and agentic use cases. Unlike legacy tools that treat Gen AI as an edge case, Acuvity starts with the premise that AI interactions are now part of the enterprise’s core attack surface – and must be protected accordingly.

Acuvity secures both employees using third-party Gen AI tools and autonomous applications executing prompts and workflows. Through a lightweight browser extension and/or endpoint agent, Acuvity provides full visibility into how, when, and where AI tools are being used – regardless of browser, operating system, endpoint configuration, or vendor. This allows security teams to monitor shadow AI usage, enforce policy controls (block, warn, redact), and generate complete audit logs tied to verified identities or agent credentials.
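As a rough illustration of graded controls (block, warn, redact) rather than a description of Acuvity's implementation, the sketch below applies the strictest matching action to a prompt. The `RULES` table, the rule names, and the `enforce` function are all assumptions made for this example, and simple regexes stand in for real detectors.

```python
import re
from dataclasses import dataclass

# Hypothetical detectors: rule name -> (pattern, action).
RULES = {
    "aws_access_key": (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "block"),
    "email_address": (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "redact"),
    "internal_codename": (re.compile(r"\bProject Falcon\b", re.IGNORECASE), "warn"),
}
SEVERITY = {"allow": 0, "warn": 1, "redact": 2, "block": 3}


@dataclass
class Verdict:
    action: str     # strictest action triggered by any rule
    prompt: str     # prompt to forward (redacted, or empty when blocked)
    findings: list  # names of the rules that matched


def enforce(prompt: str) -> Verdict:
    action, findings, text = "allow", [], prompt
    for name, (pattern, rule_action) in RULES.items():
        if pattern.search(prompt):
            findings.append(name)
            if rule_action == "redact":
                # Replace the sensitive span with a labeled placeholder.
                text = pattern.sub(f"[{name.upper()}]", text)
            if SEVERITY[rule_action] > SEVERITY[action]:
                action = rule_action
    if action == "block":
        text = ""  # nothing is forwarded when the prompt is blocked
    return Verdict(action, text, findings)


verdict = enforce("Email bob@example.com about the renewal")
```

The returned `findings` list is what would feed an audit log entry, so the same decision that shapes the prompt also leaves a record of why.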

For internal AI agents and autonomous workflows, Acuvity takes a different approach: by reverse-proxying API calls, it provides complete traceability and attribution across every prompt, model call, and response. This makes it possible to understand agent behavior in production, detect cascading risks (such as chained models or recursive agents), and enforce policy even in non-interactive contexts.
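One way to picture the traceability a reverse proxy could provide is a log of model calls in which each call records the call that triggered it. The sketch below, which assumes a hypothetical `ModelCall` record and a `parent_id` link that the source does not specify, walks those links to flag long chains of the kind produced by chained models or recursive agents.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelCall:
    """One proxied model call (hypothetical record shape)."""
    call_id: str
    parent_id: Optional[str]  # the call that triggered this one, if any
    agent_id: str
    model: str


def chain_depth(call_id: str, calls: dict) -> int:
    """Count the calls leading up to and including call_id."""
    depth, seen = 0, set()
    current = call_id
    # The seen set stops the walk if the parent links form a cycle.
    while current is not None and current not in seen:
        seen.add(current)
        depth += 1
        call = calls.get(current)
        current = call.parent_id if call else None
    return depth


def flag_deep_chains(calls: dict, max_depth: int = 3) -> list:
    """Return IDs of calls that sit deeper than max_depth in a chain."""
    return sorted(cid for cid in calls if chain_depth(cid, calls) > max_depth)


calls = {
    "a": ModelCall("a", None, "planner", "model-x"),
    "b": ModelCall("b", "a", "planner", "model-x"),
    "c": ModelCall("c", "b", "worker", "model-y"),
    "d": ModelCall("d", "c", "worker", "model-y"),
}
deep = flag_deep_chains(calls, max_depth=3)
```

The depth threshold is arbitrary here; the point is that per-call attribution makes this kind of question answerable at all.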

Unlike CASB/SASE vendors, Acuvity includes a built-in risk classifier powered by small, targeted language models that assess prompt content in real time – flagging issues like prompt injection, sensitive data exposure, jailbreak attempts, and regulatory violations. These classifications are used to drive fine-grained policy enforcement and generate actionable reports tailored for security, compliance, and executive stakeholders.

In fact, Acuvity supports different risk classifiers across categories such as sensitive data, exploits, language, malcontent, and secret detection, as well as custom data types that let you define proprietary information that should never leave your organization.

The Right Tool for the Job

As organizations wrestle with how to secure their generative AI footprint, the distinction between data governance and Gen AI security becomes more than semantic.

Acuvity was engineered precisely for this moment. It is the only platform that provides end-to-end visibility, risk analysis, and control over both employee-driven and autonomous Gen AI usage.

Securing generative AI requires a platform that understands the threat model, integrates at the right control points, and evolves with the ever-changing AI landscape.